Developing an Effective Evaluation Plan

Setting the Course for Effective Program Evaluation

Acknowledgements

This workbook was developed by the Centers for Disease Control and Prevention’s Office on Smoking and Health (OSH) and Division of Nutrition, Physical Activity and Obesity (DNPAO) as part of a series of technical assistance workbooks for use by program managers and evaluators. The workbooks are intended to offer guidance and facilitate capacity building on a wide range of evaluation topics. We encourage users to adapt the tools and resources in this workbook to meet their program’s evaluation needs.

This workbook applies the CDC Framework for Program Evaluation in Public Health (www.cdc.gov/eval). The Framework lays out a six-step process for the decisions and activities involved in conducting an evaluation. While the Framework provides steps for program evaluation, the steps are not always linear; they often involve a back-and-forth effort, and some can be completed concurrently. In some cases, it makes more sense to skip a step and come back to it. The important thing is that the steps are considered within the specific context of your program.

The following individuals from the Centers for Disease Control and Prevention, Office on Smoking and Health (OSH), and Division of Nutrition, Physical Activity, and Obesity (DNPAO) were primary contributors to the preparation of this publication:

S René Lavinghouze, MA, Evaluation Team Lead/OSH

Jan Jernigan, PhD, Senior Evaluation Scientist/DNPAO

The following also contributed to the preparation of this publication:

LaTisha Marshall, MPH, Health Scientist/OSH; LCDR Adriane Niare, MPH CHES, Health Scientist/OSH; Kim Snyder, MPH, Contractor, ICF International; Marti Engstrom, MA, Evaluation Team Lead/OSH; Rosanne Farris, PhD, RD, Branch Chief/DNPAO

We also gratefully acknowledge the contributions of ICF International in developing the graphics and in copy editing.

For more information, contact René Lavinghouze, Senior Evaluation Scientist in OSH, at [email protected], or Jan Jernigan, Senior Evaluation Scientist in DNPAO, at JJernigan1@cdc.gov.

Suggested Citation: Developing an Effective Evaluation Plan: Setting the Course for Effective Program Evaluation. Atlanta, Georgia: Centers for Disease Control and Prevention, National Center for Chronic Disease Prevention and Health Promotion, Office on Smoking and Health; Division of Nutrition, Physical Activity and Obesity, 2011.

Table of Contents

Part I: Developing Your Evaluation Plan

Who is the audience for this workbook?

What is an evaluation plan?

Why do you want an evaluation plan?

How do you write an evaluation plan?

What are the key steps in developing an evaluation plan using CDC’s Framework for Program Evaluation?

The Process of Participatory Evaluation Planning

Step 1: Engage Stakeholders

Defining the Purpose in the Plan

The ESW: Why should you engage stakeholders in developing the evaluation plan?

Who are the program’s stakeholders?

How do you use an ESW to develop an evaluation plan?

How are stakeholders’ roles described in the plan?

Step 2: Describe the Program

Shared Understanding of the Program

Narrative Description

Logic Model

Stage of Development

Step 3: Focus the Evaluation

Developing Evaluation Questions

Budget and Resources

Step 4: Planning for Gathering Credible Evidence

Choosing the Appropriate Methods

Credible Evidence

Measurement

Data Sources and Methods

Roles and Responsibilities

Evaluation Plan Methods Grid

Budget

Step 5: Planning for Conclusions

Step 6: Planning for Dissemination and Sharing of Lessons Learned

Communication and Dissemination Plans

Ensuring Use

One Last Note

Pulling It All Together

References

Part II: Exercises, Worksheets, and Tools

Step 1: 1.1 Stakeholder Mapping Exercise

Step 1: 1.2 Evaluation Purpose Exercise

Step 1: 1.3 Stakeholder Inclusion and Communication Plan Exercise

Step 1: 1.4 Stakeholder Information Needs Exercise

Step 2: 2.1 Program Stage of Development Exercise

Step 3: 3.1 Focus the Evaluation Exercise

Step 4: 4.1 Evaluation Plan Methods Grid Exercise

Step 4: 4.2 Evaluation Budget Exercise

Step 5: 5.1 Stakeholder Interpretation Meeting Exercise

Step 6: 6.1 Reporting Checklist Exercise

Tools and Templates: Checklist for Ensuring Effective Evaluation Reports

Step 6: 6.2 Communicating Results Exercise

Outline: 7.1 Basic Elements of an Evaluation Plan

Outline: 7.2 Evaluation Plan Sketchpad

Logic Model Examples

OSH Logic Models Example

Preventing Initiation of Tobacco Use Among Young People

Eliminating Nonsmokers’ Exposure to Secondhand Smoke

Promoting Quitting Among Adults and Young People

DNPAO Logic Model Example

State NPAO Program—Detailed Logic Model

Resources

Web Resources

Making your ideas stick, reporting, and program planning

Qualitative Methods

Quantitative Methods

Evaluation Use

OSH Evaluation Resources

DNPAO Evaluation Resources

Figures

Figure 1: CDC Framework for Program Evaluation in Public Health

Figure 2: Sample Logic Model

Figure 3.1: Stage of Development by Logic Model Category

Figure 3.2: Stage of Development by Logic Model Category Example

Figure 4.1: Evaluation Plan Methods Grid Example

Figure 4.2: Evaluation Plan Methods Grid Example

Figure 5: Communication Plan Table

Acronyms

BRFSS Behavioral Risk Factor Surveillance System

CDC Centers for Disease Control and Prevention

DNPAO Division of Nutrition, Physical Activity, and Obesity

ESW Evaluation Stakeholder Workgroup

NIDRR National Institute on Disability and Rehabilitation Research

OSH Office on Smoking and Health

PRAMS Pregnancy Risk Assessment Monitoring System

YRBS Youth Risk Behavior Survey

Part I: Developing Your Evaluation Plan

WHO IS THE AUDIENCE FOR THIS WORKBOOK?

The purpose of this workbook is to help public health program managers, administrators, and evaluators develop a joint understanding of what constitutes an evaluation plan, why it is important, and how to develop an effective evaluation plan in the context of the planning process. This workbook is intended to assist in developing an evaluation plan but is not intended to serve as a complete resource on how to implement program evaluation. Rather, it is intended to be used along with other evaluation resources, such as those listed in the Resource Section of this workbook. Although the workbook was written by staff of the Office on Smoking and Health (OSH) and the Division of Nutrition, Physical Activity, and Obesity (DNPAO) at the Centers for Disease Control and Prevention (CDC), together with ICF International, the content and steps for writing an evaluation plan can be applied to any public health program or initiative. Part I of this workbook defines and describes how to write an effective evaluation plan. Part II includes exercises, worksheets, tools, and a Resource Section to help program staff and the evaluation stakeholder workgroup (ESW) think through the concepts presented in Part I.

WHAT IS AN EVALUATION PLAN?

An evaluation plan is a written document that describes how you will monitor and evaluate your program, as well as how you intend to use evaluation results for program improvement and decision making. The evaluation plan clarifies how you will describe the “What,” the “How,” and the “Why It Matters” for your program.

The “What” reflects the description of your program and how its activities are linked with the intended effects. It serves to clarify the program’s purpose and anticipated outcomes.

The “How” addresses the process for implementing a program and provides information about whether the program is operating with fidelity to the program’s design. Additionally, the “How” (or process evaluation), along with output and/or short-term outcome information, helps clarify whether changes should be made during implementation.

An evaluation plan is a written document that describes how you will monitor and evaluate your program, so that you will be able to describe the “What,” the “How,” and the “Why It Matters” for your program and use evaluation results for program improvement and decision making.


The “Why It Matters” provides the rationale for your program and the impact it has on public health. This is also sometimes referred to as the “so what” question. Being able to demonstrate that your program has made a difference is critical to program sustainability.

An evaluation plan is similar to a roadmap. It clarifies the steps needed to assess the processes and outcomes of a program. An effective evaluation plan is more than a column of indicators added to your program’s work plan. It is a dynamic tool (i.e., a “living document”) that should be updated on an ongoing basis to reflect program changes and priorities over time. An evaluation plan serves as a bridge between evaluation and program planning by highlighting program goals, clarifying measurable program objectives, and linking program activities with intended outcomes.

WHY DO YOU WANT AN EVALUATION PLAN?

Just as a roadmap facilitates progress on a long journey, an evaluation plan can clarify what direction your evaluation should take based on priorities, resources, time, and the skills needed to accomplish the evaluation. Developing an evaluation plan in cooperation with an evaluation workgroup of stakeholders will foster collaboration and a sense of shared purpose. Having a written evaluation plan will foster transparency and ensure that stakeholders are on the same page with regard to the purpose, use, and users of the evaluation results. Moreover, use of evaluation results is not something that can be hoped for or wished for; it must be planned, directed, and intentional (Patton, 2008). A written plan is one of the most effective tools in your evaluation toolbox.

A written evaluation plan can:

create a shared understanding of the purpose(s), use, and users of the evaluation results,

foster program transparency to stakeholders and decision makers,

increase buy-in and acceptance of methods,

connect multiple evaluation activities, which is especially useful when a program employs different contractors or contracts,

serve as an advocacy tool for evaluation resources based on negotiated priorities and established stakeholder and decision maker information needs,

help to identify whether there are sufficient program resources and time to accomplish desired evaluation activities and answer prioritized evaluation questions,

assist in facilitating a smoother transition when there is staff turnover,

facilitate evaluation capacity building among partners and stakeholders,

provide a multi-year comprehensive document that makes explicit everything from stakeholders to dissemination to use of results, and

facilitate good evaluation practice.

There are several critical elements needed to ensure that your evaluation plan lives up to its potential. These elements include ensuring (1) that your plan is collaboratively developed with a stakeholder workgroup, (2) that it is responsive to program changes and priorities, (3) that it covers multiple years if your project is ongoing, and (4) that it addresses your entire program rather than focusing on just one funding source or objective/activity. You will, by necessity, focus the evaluation based on feasibility, stage of development, ability to consume information, and other priorities that will be discussed in Steps 3 and 4 of this workbook. However, during the planning phase, the evaluation group should consider your entire program.

HOW DO YOU WRITE AN EVALUATION PLAN?

This workbook describes the elements of an evaluation plan within the context of CDC’s Framework for Program Evaluation in Public Health (http://www.cdc.gov/eval/) and the planning process. The elements of an evaluation plan discussed in this workbook include:

Title page: Contains an easily identifiable program name, dates covered, and basic focus of the evaluation.

Intended use and users: Fosters transparency about the purpose(s) of the evaluation and identifies who will have access to evaluation results. It is important to build a market for evaluation results from the beginning. Clarifying the primary intended users, the members of the evaluation stakeholder workgroup, and the purpose(s) of the evaluation will help to build this market.

Program description: Provides the opportunity for building a shared understanding of the theory of change driving the program. This section often includes a logic model and a description of the stage of development of the program in addition to a narrative description.

Evaluation focus: Provides the opportunity to document how the evaluation focus will be narrowed and the rationale for the prioritization process. Given that there are never enough resources or time to answer every evaluation question, it is critical to work collaboratively to prioritize the evaluation based on a shared understanding of the theory of change identified in the logic model, the stage of development of the program, the intended uses of the evaluation, as well as feasibility issues. This section should delineate the criteria for evaluation prioritization and include a discussion of feasibility and efficiency.

Methods: Identifies evaluation indicators and performance measures, data sources and methods, as well as roles and responsibilities. This section provides a clear description of how the evaluation will be implemented to ensure credibility of evaluation information.

Analysis and interpretation plan: Clarifies how information will be analyzed and describes the process for interpretation of results. This section describes who will get to see interim results, whether there will be a stakeholder interpretation meeting or meetings, and methods that will be used to analyze the data.

Use, dissemination, and sharing plan: Describes plans for use of evaluation results and dissemination of evaluation findings. Clear, specific plans for evaluation use should be discussed from the beginning. This section should include a broad overview of how findings are to be used as well as more detailed information about the intended modes and methods for sharing results with stakeholders. This is a critical but often neglected section of the evaluation plan.

WHAT ARE THE KEY STEPS IN DEVELOPING AN EVALUATION PLAN USING CDC’S FRAMEWORK FOR PROGRAM EVALUATION?

CDC’s Framework for Program Evaluation in Public Health (1999) is a guide to effectively evaluating public health programs and using the findings for program improvement and decision making. While the framework is described in terms of steps, the actions are not always linear and are often completed in a back-and-forth effort that is cyclical in nature. Like the framework, the development of an evaluation plan is an ongoing process. You may need to revisit a step during the process and complete other discrete steps concurrently. Within each step of the framework, there are important components that are useful to consider in the creation of an evaluation plan.


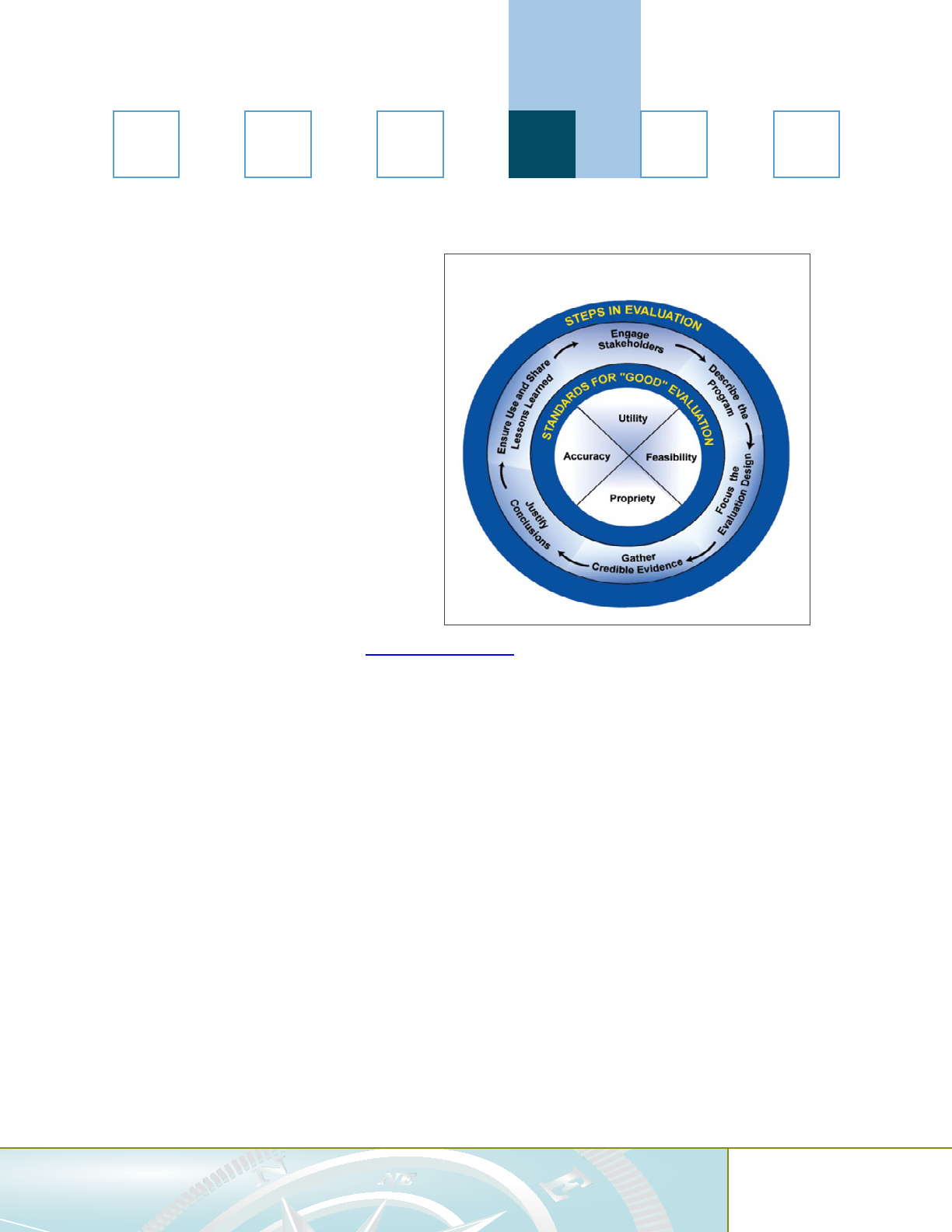

Figure 1: CDC Framework for Program Evaluation in Public Health

Steps:

1. Engage stakeholders.

2. Describe the program.

3. Focus the evaluation design.

4. Gather credible evidence.

5. Justify conclusions.

6. Ensure use and share lessons learned.

In addition to CDC’s Framework for Program Evaluation in Public Health, there are evaluation standards that enhance the quality of evaluations by guarding against potential mistakes or errors in practice. The evaluation standards are grouped around four important attributes, as indicated by the inner circle in Figure 1: utility, feasibility, propriety, and accuracy.

Utility: Serve information needs of intended users.

Feasibility: Be realistic, prudent, diplomatic, and frugal.

Propriety: Behave legally, ethically, and with due regard for the welfare of those involved and those affected.

Accuracy: Evaluation is comprehensive and grounded in the data.

(The Joint Committee on Standards for Educational Evaluation, 1994)

CDC’s Framework for Program Evaluation

It is critical to remember that these standards apply to all steps and phases of the evaluation plan.


THE PROCESS OF PARTICIPATORY EVALUATION PLANNING

Step 1: Engage Stakeholders

Defining the Purpose in the Plan

Identifying the purpose of the evaluation is as important as identifying the end users or stakeholders who will be part of a consultative group. These two aspects of the evaluation serve as a foundation for evaluation planning, focus, design, and interpretation and use of results. The purpose of an evaluation influences the identification of stakeholders for the evaluation, the selection of specific evaluation questions, and the timing of evaluation activities. It is critical that the program be transparent about the intended purposes of the evaluation. If evaluation results will be used to determine whether a program should be continued or eliminated, stakeholders should know this up front. The stated purpose of the evaluation drives expectations and sets the boundaries for what the evaluation can and cannot deliver. In any single evaluation, and especially in a multi-year plan, more than one purpose may be identified; however, the primary purpose can influence resource allocation, use, the stakeholders included, and more. Prioritizing purposes in the plan can help establish the link between purposes and the intended use of evaluation information. While there are many ways of stating the identified purpose(s) of the evaluation, they generally fall into three primary categories:

1. Rendering judgments—accountability

2. Facilitating improvements—program development

3. Knowledge generation—transferability

(Patton, 2008)

An Evaluation Purpose identification tool/worksheet is provided in Part II, Section 1.2 to assist you with determining intended purposes for your evaluation.


The ESW: Why should you engage stakeholders in developing the evaluation plan?

A primary feature of an evaluation plan is the identification of an ESW, which includes members who have a stake or vested interest in the evaluation findings, particularly the intended users who can most directly benefit from the evaluation (Patton, 2008; Knowlton & Phillips, 2009), as well as others who have a direct or indirect interest in program implementation. Engaging stakeholders in the ESW enhances intended users’ understanding and acceptance of the utility of evaluation information. Stakeholders are much more likely to buy into and support the evaluation if they are involved in the evaluation process from the beginning. Moreover, to ensure that the information collected, analyzed, and reported successfully meets the needs of the program and stakeholders, it is best to work with the people who will be using this information throughout the entire process.

Engaging stakeholders in an evaluation can have many benefits. In general, stakeholders include people who will use the evaluation results, support or maintain the program, or are affected by the program activities or evaluation results. Stakeholders can help:

determine and prioritize key evaluation questions,

pretest data collection instruments,

facilitate data collection,

implement evaluation activities,

increase credibility of analysis and interpretation of evaluation information, and

ensure evaluation results are used.

A Stakeholder Information Needs identification exercise is provided in Part II, Section 1.4 to assist you with determining stakeholder information needs.

The ESW is composed of members who have a stake or vested interest in the evaluation findings and can most directly benefit from the evaluation. These members represent the primary users of the evaluation results and generally act as a consultative group throughout the entire planning process, as well as the implementation of the evaluation. Additionally, members sometimes facilitate the implementation and/or the dissemination of results. Examples include promoting responses to surveys and providing in-kind support for interviews and interpretation meetings. The members can even identify resources to support evaluation efforts. The exact nature and roles of group members are up to you, but roles should be explicitly delineated and agreed to in the evaluation plan.


Several questions pertaining to stakeholders may arise among program staff, including:

Who are the program’s stakeholders?

How can we work with all of our stakeholders?

How are stakeholders’ role(s) described in the plan?

This section will help programs address these and other questions about stakeholders and their roles in the evaluation to guide them in writing an effective evaluation plan.

Who are the program’s stakeholders?

The first question to answer when the program begins to write its evaluation plan is which stakeholders to include. Stakeholders are consumers of the evaluation results. As consumers, they will have a vested interest in the results of the evaluation. In general, stakeholders are 1) those who are interested in the program and would use evaluation results, such as clients, community groups, and elected officials; 2) those who are involved in running the program, such as program staff, partners, management, the funding source, and coalition members; and 3) those who are served by the program, their families, or the general public. Others may also be included, as these categories are not exclusive.

How do you use an ESW to develop an evaluation plan?

It is often said of public health programs, “everyone is your stakeholder.” Stakeholders will often have diverse and, at times, competing interests. Given that a single evaluation cannot answer all possible evaluation questions raised by diverse groups, it will be critical that the prioritization process is outlined in the evaluation plan and that the stakeholder groups represented are identified.

It is suggested that the program enlist the aid of an ESW of 8 to 10 members that represents the stakeholders who have the greatest stake or vested interest in the evaluation (Centers for Disease Control, 2008). These stakeholders, or primary intended users, will serve in a consultative role on all phases of the evaluation. As members of the ESW, they will be an integral part of the entire evaluation process, from the initial design phase to interpretation, dissemination, and ensuring use. Stakeholders will play a major role in the program’s evaluation, including consultation and possibly even data collection, interpretation, and decision making based on the evaluation results. Sometimes stakeholders have competing interests that may come to light in the evaluation planning process. It is important to explore agendas in the beginning and come to a shared understanding of roles and responsibilities, as well as the purposes of the evaluation. It is important that both the program and the ESW understand and agree to the importance and role of the workgroup in this process.

In order to meaningfully engage your stakeholders, you will need to allow time for resolving conflicts and coming to a shared understanding of the program and evaluation. However, the time is worth the effort and leads toward a truly participatory, empowerment approach to evaluation.

How are stakeholders’ roles described in the plan?

It is important to document information within your written evaluation plan based on the context of your program. For the ESW to be truly integrated into the process, its members should ideally be identified in the evaluation plan. The form this takes may vary based on program needs. If it is important politically, a program might want to specifically name each member of the workgroup, their affiliation, and their specific role(s) on the workgroup. If a workgroup is designed with rotating membership by group, then the program might just list the groups represented. For example, a program might have a workgroup composed of members representing funded programs (three members), non-funded programs (one member), and national partners (four members), or a workgroup composed of members representing state programs (two members), community programs (five members), and external evaluation expertise (two members). Being transparent about the role and purpose of the ESW can facilitate buy-in for evaluation results from those who did not participate in the evaluation, especially in situations where the evaluation is implemented by internal staff members. Another by-product of workgroup membership is that stakeholders and partners increase their capacity for evaluation activities and their ability to be savvy consumers of evaluation information. This can have downstream impacts on stakeholders’ and partners’ programs, such as program improvement and timely, informed decision making. A stakeholder inclusion chart or table can be a useful tool to include in your evaluation plan.
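As a quick illustration, the membership counts in the two example workgroups can be tallied and checked against the suggested ESW size of 8 to 10 members. The following Python sketch is hypothetical: the dictionary layout and the function names `workgroup_size` and `within_recommended_size` are assumptions made for illustration and are not part of any CDC tool or worksheet.

```python
from typing import Dict

# Suggested ESW size range stated in this workbook (8 to 10 members).
RECOMMENDED_MIN = 8
RECOMMENDED_MAX = 10


def workgroup_size(composition: Dict[str, int]) -> int:
    """Total number of seats across all represented stakeholder groups."""
    return sum(composition.values())


def within_recommended_size(composition: Dict[str, int]) -> bool:
    """True if the workgroup falls within the suggested 8 to 10 member range."""
    return RECOMMENDED_MIN <= workgroup_size(composition) <= RECOMMENDED_MAX


# The two example compositions described in the text (hypothetical labels).
example_a = {"funded programs": 3, "non-funded programs": 1, "national partners": 4}
example_b = {"state programs": 2, "community programs": 5, "external evaluation expertise": 2}

if __name__ == "__main__":
    for label, composition in [("A", example_a), ("B", example_b)]:
        print(label, workgroup_size(composition), within_recommended_size(composition))
```

Both example workgroups fall inside the suggested range (8 and 9 members). Listing seats by group, rather than by individual name, mirrors the rotating-membership option described above, where the plan records the groups represented.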

A Stakeholder Mapping exercise and engagement tool/worksheet is provided in Part II, Sections 1.1 and 1.1b to assist you with planning for your evaluation workgroup.


The process for stakeholder engagement should also be described in other steps related to the development of the evaluation plan, which may include:

Step 2: Describe the program. A shared understanding of the program and what the evaluation can and cannot deliver is essential to the success of implementation of evaluation activities and use of evaluation results. The program and stakeholders must agree upon the logic model, stage of development description, and purpose(s) of the evaluation.

Step 3: Focus the evaluation. Understanding the purpose of the evaluation and the rationale for prioritization of evaluation questions is critical for transparency and acceptance of evaluation findings. It is essential that the evaluation address those questions of greatest need to the program and priority users of the evaluation.

Step 4: Planning for gathering credible evidence. Stakeholders have to accept that the methods selected are appropriate to the questions asked and that the data collected are credible; otherwise, the evaluation results will not be accepted or used. The market for and acceptance of evaluation results begins in the planning phase. Stakeholders can inform the selection of appropriate methods.

Step 5: Planning for conclusions. Stakeholders should inform the analysis

and interpretation of findings and facilitate the development of conclusions and

recommendations. This in turn will facilitate the acceptance and use of the evaluation

results by other stakeholder groups. Stakeholders can help determine if and when

stakeholder interpretation meetings should be conducted.

Step 6: Planning for dissemination and sharing of lessons learned. Stakeholders

should inform the translation of evaluation results into practical applications and actively

participate in the meaningful dissemination of lessons learned. This will help ensure

use of the evaluation. Stakeholders can facilitate the development of an intentional,

strategic communication and dissemination plan within the evaluation plan.


EVALUATION PLAN TIPS FOR STEP 1

Identify intended users who can directly benefit from and use the evaluation

results.

Identify an evaluation stakeholder workgroup of 8 to 10 members.

Engage stakeholders throughout the plan development process as well as the

implementation of the evaluation.

Identify intended purposes of the evaluation.

Allow for adequate time to meaningfully engage the evaluation stakeholder

workgroup.

EVALUATION TOOLS AND RESOURCES FOR STEP 1:

1.1 Stakeholder Mapping Exercise

1.1b Stakeholder Mapping Exercise Example

1.2 Evaluation Purpose Exercise

1.3 Stakeholder Inclusion and Communication Plan Exercise

1.4 Stakeholder Information Needs

AT THIS POINT IN YOUR PLAN, YOU HAVE—

identified the primary users of the evaluation,

created the evaluation stakeholder workgroup, and

defined the purposes of the evaluation.


Step 2: Describe the Program

Shared Understanding of the Program

The next step in the CDC Framework and the evaluation

plan is to describe the program. A program description

clarifies the program’s purpose, stage of development,

activities, capacity to improve health, and implementation

context. A shared understanding of the program and what

the evaluation can and cannot deliver is essential to the

successful implementation of evaluation activities and

use of evaluation results. The program and stakeholders must agree upon the logic model,

stage of development description, and purpose(s) of the evaluation. This work will set the

stage for identifying the program evaluation questions, focusing the evaluation design, and

connecting program planning and evaluation.

Narrative Description

A narrative description helps ensure a full and complete shared understanding of the

program. A logic model may be used to succinctly synthesize the main elements of a

program. While a logic model is not always necessary, a program narrative is. The program

description is essential for focusing the evaluation design and selecting the appropriate

methods. Too often groups jump to evaluation methods before they even have a grasp

of what the program is designed to achieve or what the evaluation should deliver. Even

though much of this will have been included in your funding application, it is good practice

to revisit this description with your ESW to ensure a shared understanding and that the

program is still being implemented as intended. The description will be based on your

program’s objectives and context but most descriptions include at a minimum:

A statement of need to identify the health issue addressed

Inputs or program resources available to implement program activities

Program activities linked to program outcomes through theory or best practice program logic

Stage of development of the program to reflect program maturity

Environmental context within which a program is implemented



Logic Model

The description section often includes a logic model to visually show the link between

activities and intended outcomes. It is helpful to review the model with the ESW to ensure

a shared understanding of the model and that the logic model is still an accurate and

complete reflection of your program. The logic model should identify available resources

(inputs), what the program is doing (activities), and what you hope to achieve (outcomes).

You might also want to articulate any challenges you face (the program’s context or

environment). Figure 2 illustrates the basic components of a program logic model. As you view the logic model from left to right, the further an outcome is from the intervention, the more time is needed to observe it. A major challenge in evaluating chronic disease prevention

and health promotion programs is one of attribution versus contribution and the fact that

distal outcomes may not occur in close proximity to the program interventions or policy

change. In addition, given the complexities of dynamic implementation environments,

realized impacts may differ from intended impacts. However, the rewards of understanding

the proximal and distal impacts of the program intervention often outweigh the challenges.

Logic model elements include:

Inputs: Resources necessary for program implementation

Activities: The actual interventions that the program implements in order to achieve health outcomes

Outputs: Direct products obtained as a result of program activities

Outcomes (short-term, intermediate, long-term, distal): The changes, impacts, or results of program implementation (activities and outputs)

Figure 2: Sample Logic Model


Stage of Development

Another activity that will be needed to fully describe your program and prepare you to

focus your evaluation is an accurate assessment of the stage of development of the

program. The developmental stages that programs typically move through are planning,

implementation, and maintenance. In the example of a policy or environmental initiative,

the stages might look somewhat like this:

1. Assess environment and assets.

2. Policy or environmental change is in development.

3. The policy or environmental change has not yet been approved.

4. The policy or environmental change has been approved but not implemented.

5. The policy or environmental change has been in effect for less than 1 year.

6. The policy or environmental change has been in effect for 1 year or longer.

Steps 1 through 3 would typically fall under the planning stage, Steps 4 and 5 under implementation, and Step 6 under maintenance. It is important to consider a developmental model because programs are dynamic and evolve over time. Programs are seldom set in stone, and progress is affected by many aspects of the political and economic context. When it comes to evaluation, the stages are not always a “once-and-done” sequence of events. When a program has progressed past the initial planning stage, it may experience occasions where environment and asset assessment is still needed. Additionally, in a multi-year plan, the evaluation should anticipate future evaluation projects by preparing the datasets and baseline information those projects will need to examine more distal impacts and outcomes. This is an advantage of completing a multi-year evaluation plan with your ESW: preparation!
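For readers who keep planning materials in a spreadsheet or script, the grouping of the six example policy stages into developmental phases can be written down as a simple lookup. The sketch below is illustrative only: the names `POLICY_STAGES`, `PHASE_FOCUS`, and `phase_for_stage` are hypothetical, and the phase-to-category pairing restates Figure 3.1 (planning with inputs and activities, implementation with outputs and short-term outcomes, maintenance with intermediate and long-term outcomes).

```python
# A minimal sketch, assuming the six example policy stages listed above.
# POLICY_STAGES, PHASE_FOCUS, and phase_for_stage are hypothetical names.
POLICY_STAGES = {
    1: "Assess environment and assets",
    2: "Policy or environmental change is in development",
    3: "The policy or environmental change has not yet been approved",
    4: "The policy or environmental change has been approved but not implemented",
    5: "The policy or environmental change has been in effect for less than 1 year",
    6: "The policy or environmental change has been in effect for 1 year or longer",
}

# Logic model categories paired with each developmental phase (Figure 3.1).
PHASE_FOCUS = {
    "planning": ["inputs", "activities"],
    "implementation": ["outputs", "short-term outcomes"],
    "maintenance": ["intermediate outcomes", "long-term outcomes"],
}


def phase_for_stage(stage: int) -> str:
    """Return the developmental phase for a numbered policy stage (1 to 6)."""
    if stage not in POLICY_STAGES:
        raise ValueError(f"unknown stage: {stage}")
    if stage <= 3:  # Steps 1 through 3
        return "planning"
    if stage <= 5:  # Steps 4 and 5
        return "implementation"
    return "maintenance"  # Step 6


if __name__ == "__main__":
    for stage, description in POLICY_STAGES.items():
        phase = phase_for_stage(stage)
        print(f"{stage}. {description} -> {phase} ({', '.join(PHASE_FOCUS[phase])})")
```

Because the stages are not a once-and-done sequence, a program past initial planning may still need questions from more than one phase; treat a lookup like this as a starting point for discussion with your ESW, not a rule.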

The stage of development conceptual model is complementary to the logic model. Figure

3.1 shows how general program evaluation questions are distinguished by both logic model

categories and the developmental stage of the program. This places evaluation within the

appropriate stage of program development (planning, implementation, and maintenance).

The model offers suggested starting points for asking evaluation questions within the

logic model while respecting the developmental stage of the program. This will prepare

the program and the workgroup to focus the evaluation appropriately based on program

maturity and priorities.


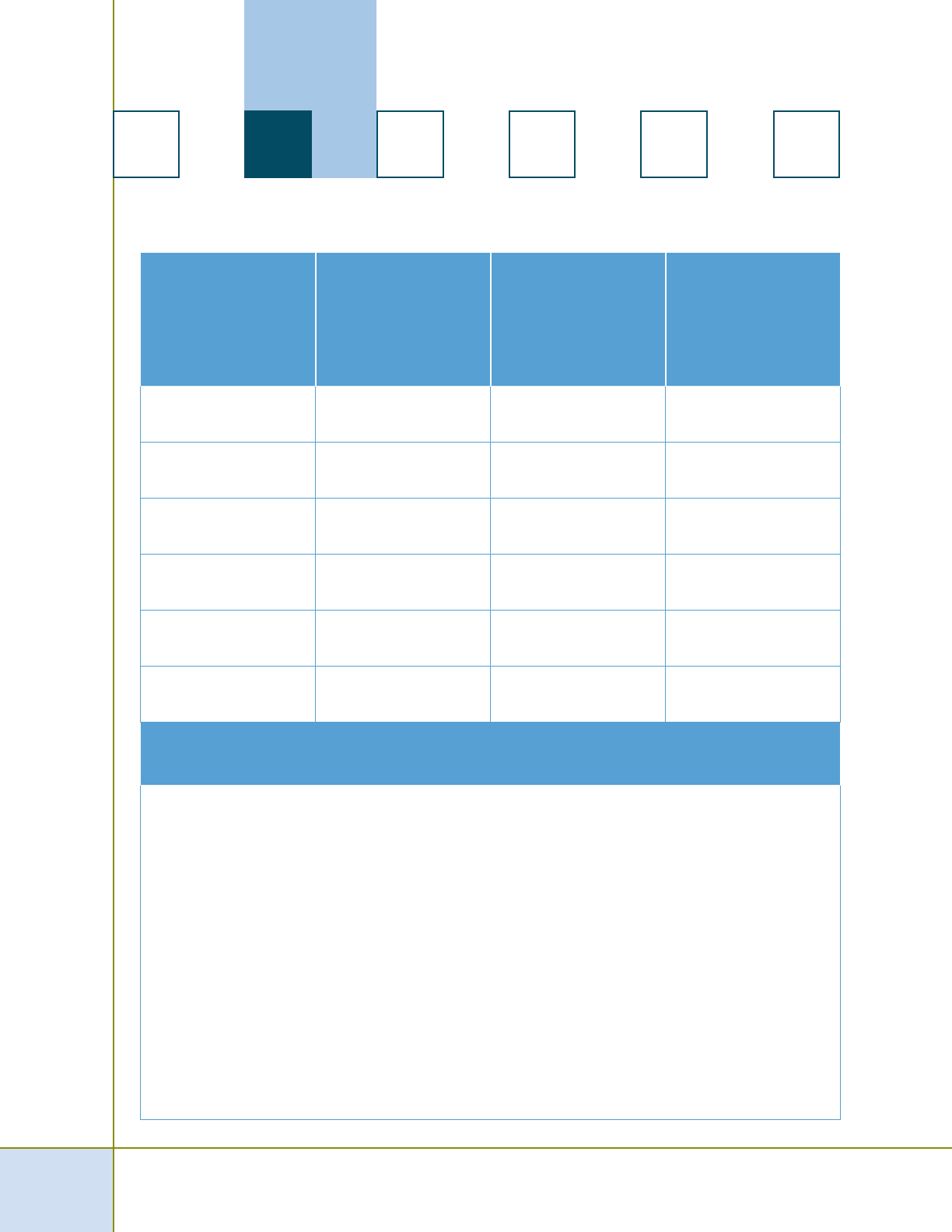

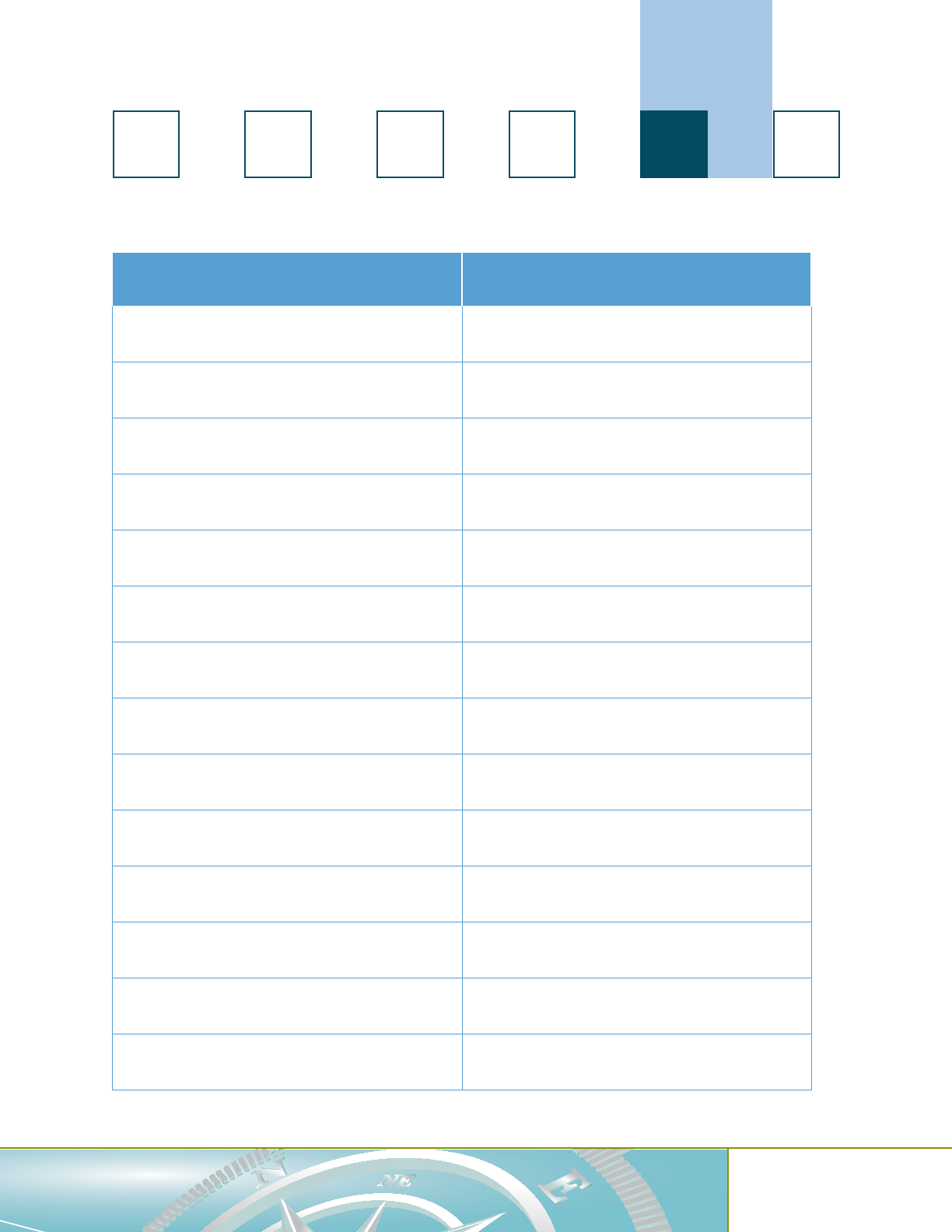

Figure 3.1: Stage of Development by Logic Model Category

| Developmental Stage | Program Planning | Program Implementation | Program Maintenance |
| --- | --- | --- | --- |
| Logic Model Category | Inputs and Activities | Outputs and Short-term Outcomes | Intermediate and Long-term Outcomes |

Figure 3.2: Stage of Development by Logic Model Category Example

| Developmental Stage | Program Planning | Program Implementation | Program Maintenance |
| --- | --- | --- | --- |
| Example: Developmental Stages When Passing a Policy | Assess environment and assets; Develop policy; The policy has not yet been passed | The policy has been passed but not implemented; The policy has been in effect for less than 1 year | The policy has been in effect for 1 year or longer |
| Example: Questions Based on Developmental Stage When Passing a Policy | Is there public support for the policy? What resources will be needed for implementation of the policy? | Is there compliance with the policy? Is there continued or increased public support for the policy? Are there major exemptions or loopholes to the policy? | What is the health impact of the policy? |


Key evaluation questions and needs for information will differ based on the stage of

development of the program. Additionally, the ability to answer key evaluation questions will differ by the program’s stage of development, and stakeholders need to be aware of what the evaluation can and cannot answer. For the policy program example above, planning-stage questions might include:

Is there public support for the policy?

What resources will be needed for implementation of the policy?

Implementation stage questions might include:

Is there compliance with the policy?

Is there continued or increased public support for the policy?

Are there major exemptions or loopholes to the policy?

Maintenance stage questions might include:

What is the economic impact of the policy?

What is the health impact of the policy?

For more on stage of development and smoke-free policies, please see the Evaluation Toolkit for Smoke-Free Policies at http://www.cdc.gov/tobacco/basic_information/secondhand_smoke/evaluation_toolkit/index.htm.

A Program Stage of Development exercise is included in Part II, Section 2.1.


EVALUATION PLAN TIPS FOR STEP 2

A program description will facilitate a shared understanding of the program

between the program staff and the evaluation workgroup.

The description section often includes a logic model to visually show the link

between activities and intended outcomes.

The logic model should identify available resources (inputs), what the program is

doing (activities), and what you hope to achieve (outcomes).

A quality program evaluation is most effective when it is part of a larger conceptual model of a program and its development.

EVALUATION TOOLS AND RESOURCES FOR STEP 2:

2.1 Program Stage of Development Exercise

Evaluation Toolkit for Smoke-Free Policies at http://www.cdc.gov/tobacco/basic_information/secondhand_smoke/evaluation_toolkit/index.htm

AT THIS POINT IN YOUR PLAN, YOU HAVE—

identified the primary users of the evaluation,

created the evaluation stakeholder workgroup,

defined the purposes of the evaluation,

described the program, including context,

created a shared understanding of the program, and

identified the stage of development of the program.


Step 3: Focus the Evaluation

The amount of information you can gather concerning your program is potentially limitless.

Evaluations, however, are always restricted by the number of questions that can be

realistically asked and answered with quality, the methods that can be employed, the

feasibility of data collection, and the available resources. These are the issues at the

heart of Step 3 in the CDC Framework: focusing the evaluation. The scope and depth of

any program evaluation depend on program and stakeholder priorities; available

resources, including financial resources; staff and contractor availability; and amount of

time committed to the evaluation. The program staff should work together with the ESW

to determine the priority and feasibility of these questions and identify the uses of results

before designing the evaluation plan. In this part of the plan, you will apply the purposes

of the evaluation, its uses, and the program description to narrow the evaluation questions

and focus the evaluation for program improvement and decision making. In this step, you

may begin to notice the iterative process of developing the evaluation plan as you revisit

aspects of Step 1 and Step 2 to inform decisions to be made in Step 3.

Useful evaluations are not about special research interests or what is easiest to implement, but about

what information will be used by the program, stakeholders (including funders), and

decision makers to improve the program and make decisions. Establishing the focus of the

evaluation began with the identification of the primary purposes and the primary intended

users of the evaluation. This process was further solidified through the selection of the ESW.

Developing the purposeful intention to use evaluation information and not just produce

another evaluation report starts at the very beginning with program planning and your

evaluation plan. You need to garner stakeholder interest and prepare stakeholders for evaluation

use. This step facilitates conceptualizing what the evaluation can and cannot deliver.

It is important to collaboratively focus the evaluation design with your ESW based on the

identified purposes, program context, logic model, and stage of development. Additionally,

issues of priority, feasibility, and efficiency need to be discussed with the ESW and those

responsible for the implementation of the evaluation. Transparency is particularly important

in this step. Stakeholders and users of the evaluation will need to understand why some

questions were identified as high priorities while others were rejected or delayed.

A Focus the Evaluation exercise is located in Part II, Section 3.1 of this workbook.


Developing Evaluation Questions

In this step, it is important to solicit evaluation questions from your various stakeholder

groups based on the stated purposes of the evaluation. The questions should then

be considered through the lens of the logic model/program description and stage of

development of the program. Evaluation questions should be checked against the logic

model and changes may be made to either the questions or the logic model, thus reinforcing

the iterative nature of the evaluation planning process. The stage of development discussed

in the previous chapter will facilitate narrowing the evaluation questions even further. It is

important to remember that a program may experience characteristics of several stages

simultaneously once past the initial planning stage. You may want to ask yourself this

question: How long has your program been in existence? If your program is in the planning

stage, it is unlikely that measuring distal outcomes will be useful for informing program

decision making. However, in a multi-year evaluation plan, you may begin to plan for and

develop the appropriate surveillance and evaluation systems and baseline information

needed to measure these distal outcomes (to be conducted in the final initiative year) as

early as year 1. In another scenario, you may have a coalition that has been established for

10 years and is in the maintenance stage. However, contextual changes may require you to

rethink the programmatic approach being taken. In this situation, you may want to do an

evaluation that looks at both planning stage questions (“Are the right folks at the table?” and

“Are they really engaged?”), as well as maintenance stage questions (“Are we having the

intended programmatic impact?”). Questions can be further prioritized based on the ESW

and program information needs as well as feasibility and efficiency issues.

If a funder requires an evaluation plan, you will often see language like this:

“Submit with application a comprehensive written evaluation plan that includes activities for both process and outcome measures.”

Distinguishing between process and outcome evaluation can be similar to considering

the stage of development of your program against your program logic model. In general,

process evaluation focuses on the first three boxes of the logic model: inputs, activities,

and outputs (CDC, 2008). This discussion with your ESW can further facilitate the focus of

your evaluation.


Process Evaluation Focus

Process evaluation enables you to describe and assess your

program’s activities and to link your progress to outcomes. This

is important because the link between outputs and short-term

outcomes remains an empirical question.

(CDC, 2008)

Outcome evaluation, as the term implies, focuses on the

last three outcome boxes of the logic model: short-term,

intermediate, and long-term outcomes.

Outcome Evaluation Focus

Outcome evaluation allows researchers to document health

and behavioral outcomes and identify linkages between an

intervention and quantifiable effects.

(CDC, 2008)

As a program can experience the characteristics of several

stages of development at once, so, too, a single evaluation

plan can and should include both process and outcome

evaluation questions at the same time. Excluding process

evaluation questions in favor of outcome evaluation questions

often eliminates the understanding of the foundation that supports outcomes.
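The split described above (process evaluation on inputs, activities, and outputs; outcome evaluation on short-term, intermediate, and long-term outcomes) can be sketched as a tag on each logic model box, which makes it easy to check whether a draft list of questions includes both kinds. This is a hypothetical Python sketch: the class `EvaluationQuestion`, the function `covers_both`, and the example questions are illustrative assumptions, not part of the CDC Framework.

```python
from dataclasses import dataclass
from typing import List

# Logic model boxes, left to right. Per the text, process evaluation
# focuses on the first three boxes and outcome evaluation on the last three.
LOGIC_MODEL = [
    "inputs", "activities", "outputs",
    "short-term outcomes", "intermediate outcomes", "long-term outcomes",
]
PROCESS_BOXES = set(LOGIC_MODEL[:3])
OUTCOME_BOXES = set(LOGIC_MODEL[3:])


@dataclass
class EvaluationQuestion:
    text: str
    component: str  # hypothetical field: the logic model box the question targets

    @property
    def focus(self) -> str:
        """Classify the question as 'process' or 'outcome' by its logic model box."""
        if self.component in PROCESS_BOXES:
            return "process"
        if self.component in OUTCOME_BOXES:
            return "outcome"
        raise ValueError(f"not a logic model component: {self.component}")


def covers_both(questions: List[EvaluationQuestion]) -> bool:
    """True if the draft plan includes both process and outcome questions."""
    return {q.focus for q in questions} == {"process", "outcome"}


if __name__ == "__main__":
    plan = [
        EvaluationQuestion("Were the planned activities implemented as intended?", "activities"),
        EvaluationQuestion("What is the health impact of the policy?", "long-term outcomes"),
    ]
    print(covers_both(plan))  # True
```

A check like this only confirms that both kinds of questions are present; deciding which questions matter most remains a judgment made with your ESW.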

As you and the ESW take ownership of the evaluation, you will find that honing the

evaluation focus will likely solidify interest in the evaluation. Selection of final evaluation

questions should balance what is most useful to achieving your program’s information

needs while also meeting your stakeholders’ information needs. Having stakeholders participate in the selection of questions increases the likelihood of their securing evaluation resources, providing access to data, and using the results. This process increases “personal ownership” of the evaluation by the ESW. However, given that resources are limited, the evaluation cannot answer all potential questions.

Additional resources on process and outcome evaluation are identified in the Resource Section of this workbook.

The ultimate goal is to focus the evaluation design such that it reflects the program stage

of development, selected purpose of the evaluation, uses, and questions to be answered.

Transparency related to the selection of evaluation questions is critical to stakeholder

acceptance of evaluation results and possibly even to continued support of the program.

Even with an established multi-year plan, Step 3 should be revisited with your ESW

annually (or more often if needed) to determine if priorities and feasibility issues still hold

for the planned evaluation activities. This highlights the dynamic nature of the evaluation

plan. Ideally, your plan should be intentional and strategic by design and generally cover

multiple years for planning purposes. But the plan is not set in stone. It should be flexible, because resources and priorities change, and adaptive, because opportunities and programs change. You may have a new funding opportunity and a short-term program added to your overall program. This may require inserting a smaller evaluation plan specific to the newly funded project, but with the overall program evaluation goals and objectives in mind. Or resources could be cut for a particular program, requiring a reduction in the evaluation budget. The planned evaluation may have to be

reduced or delayed. Your evaluation plan should be flexible and adaptive to accommodate

these scenarios while still focusing on the evaluation goals and objectives of the program

and the ESW.

Budget and Resources

Discussion of budget and resources (financial and human) that can be allocated to the

evaluation will likely be included in your feasibility discussion. Best Practices for Comprehensive Tobacco Control Programs (2007) recommends that at least 10% of your total program resources be allocated to surveillance and program evaluation.

The questions and subsequent methods selected will have a direct relationship to the

financial resources available, evaluation team member skills, and environmental constraints

(e.g., you might like to do an in-person home interview of the target population, but the

neighborhood is not one that interviewers can visit safely). Stakeholder involvement can help you advocate for the resources needed to implement the evaluation necessary to answer priority questions. Sometimes, however, you might not have the resources necessary to fund the evaluation questions you would like to answer most. A thorough discussion of feasibility and recognition of real constraints will facilitate a shared

understanding of what the evaluation can and cannot deliver. The process of selecting

the appropriate methods to answer the priority questions and discussing feasibility and

efficiency is iterative. Steps 3, 4, and 5 in planning the evaluation will often be visited

concurrently in a back-and-forth progression until the group comes to consensus.
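As a worked example of the 10% guideline cited under Budget and Resources, the sketch below computes the minimum surveillance-and-evaluation allocation for a given total program budget and checks a proposed evaluation budget against it. Only the 10% floor comes from the source; the function names and dollar amounts are hypothetical, and amounts are whole dollars so the arithmetic stays exact.

```python
# Only the 10% floor comes from Best Practices for Comprehensive Tobacco
# Control Programs (2007); names and figures here are hypothetical.
MIN_EVALUATION_PERCENT = 10


def minimum_evaluation_budget(total_program_budget: int) -> int:
    """Smallest whole-dollar amount that is at least 10% of the total budget."""
    if total_program_budget < 0:
        raise ValueError("budget cannot be negative")
    # Integer ceiling division, so the result never falls below the 10% floor.
    return (total_program_budget * MIN_EVALUATION_PERCENT + 99) // 100


def meets_guideline(total_program_budget: int, evaluation_budget: int) -> bool:
    """True if the evaluation budget is at least 10% of the total program budget."""
    return evaluation_budget * 100 >= total_program_budget * MIN_EVALUATION_PERCENT


if __name__ == "__main__":
    total = 1_200_000    # hypothetical total program budget
    proposed = 90_000    # hypothetical planned evaluation budget
    floor = minimum_evaluation_budget(total)
    print(floor, meets_guideline(total, proposed), floor - proposed)
```

For this hypothetical $1,200,000 program, the floor is $120,000, so a planned $90,000 evaluation budget would fall $30,000 short. A gap like that is the kind of real constraint the feasibility discussion with your ESW should surface.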

EVALUATION PLAN TIPS FOR STEP 3

It is not possible or appropriate to evaluate every aspect or specific initiative of a

program every year.

Evaluation focus is context dependent and related to the purposes of the

evaluation, primary users, stage of development, logic model, program priorities,

and feasibility.

Evaluation questions should be checked against the logic model and stage of

development of the program.

The iterative nature of plan development is reinforced in this step.

EVALUATION TOOLS AND RESOURCES FOR STEP 3:

3.1 Focus the Evaluation Exercise

AT THIS POINT IN YOUR PLAN, YOU HAVE—

identified the primary users of the evaluation,

created the evaluation stakeholder workgroup,

defined the purposes of the evaluation,

described the program, including context,

created a shared understanding of the program,

identified the stage of development of the program, and

prioritized evaluation questions and discussed feasibility,

budget, and resource issues.


Step 4: Planning for Gathering Credible Evidence

Now that you have solidified the focus of your evaluation and identified the questions to

be answered, it will be necessary to select the appropriate methods that fit the evaluation

questions you have selected. Sometimes the approach to evaluation planning is guided

by a favorite method(s) and the evaluation is forced to fit that method. This could lead

to incomplete or inaccurate answers to evaluation questions.

Ideally, the evaluation questions inform the methods. If you have

followed the steps in the workbook, you have collaboratively

chosen the evaluation questions with your ESW that will provide

you with information that will be used for program improvement

and decision making. This is documented and transparent

in your evaluation plan. Now is the time to select the most

appropriate method to fit the evaluation questions and describe

the selection process in your plan. Additionally, it is prudent to

identify in your plan a timeline and the roles and responsibilities

of those overseeing the evaluation activity implementation, whether they are

program or stakeholder staff.

To accomplish this step in your evaluation plan, you will need

to—

keep in mind the purpose, logic model/program

description, stage of development of the program,

evaluation questions, and what the evaluation can and

cannot deliver,

confirm that the method(s) fit the question(s); there are many options, including but not limited to qualitative, quantitative, mixed-methods, multiple methods, naturalistic inquiry, experimental, and quasi-experimental,

think about what will constitute credible evidence for

stakeholders or users,

identify sources of evidence (e.g., persons, documents,

observations, administrative databases, surveillance systems) and appropriate

methods for obtaining quality (i.e., reliable and valid) data,

identify roles and responsibilities along with timelines to ensure the project remains

on time and on track, and

remain flexible and adaptive, and as always, transparent.

Fitting the Method

to the Evaluation

Question(s)

The method or

methods chosen need

to fit the evaluation

question(s) and not be

chosen just because

they are a favored

method or specifically

quantitative or

experimental in nature.

A misfit between

evaluation question

and method can and

often does lead to

incomplete or even

inaccurate information.

The method needs to

be appropriate for the

question in accordance

with the Evaluation

Standards.


Choosing the Appropriate Methods

It is at this point that the debate between qualitative and quantitative methods usually arises. The issue is not that one method is right and the other wrong, but which method or combination of methods will answer the evaluation questions.

Some options that may point you in the direction of qualitative methods:

You are planning and want to assess what to consider when designing a program or

initiative. You want to identify elements that are likely to be effective.

You are looking for feedback while a program or initiative is in its early stages and

want to implement a process evaluation. You want to understand approaches to

enhance the likelihood that an initiative (e.g., policy or environmental change) will be

adopted.

Something isn’t working as expected and you need to know why. You need to

understand the facilitators and barriers to implementation of a particular initiative.

You want to truly understand how a program is implemented on the ground and

need to develop a model or theory of the program or initiative.

Some options that may point you in the direction of quantitative methods:

You are looking to identify current and future movement or trends of a particular

phenomenon or initiative.

You want to consider standardized outcomes across programs. You need to monitor

outputs and outcomes of an initiative. You want to document the impact of a

particular initiative.

You want to know the costs associated with the implementation of a particular

intervention.

You want to understand what standardized outcomes are connected with a

particular initiative and need to develop a model or theory of the program or initiative.

Or the most appropriate method may be a mixed-methods approach, in which the qualitative data provide value, understanding, and application to the quantitative data. It is beyond the scope of this workbook to address the full process of deciding what method(s) are most appropriate for which types of evaluation questions. The question is not whether to

apply qualitative or quantitative methods but what method is most appropriate to answer

the evaluation question chosen. Additional resources on this are provided in the resource

section in Part II.


Credible Evidence

The evidence you gather to support the

answers to your evaluation questions

should be seen as credible by the

primary users of the evaluation. The

determination of what is credible is

often context dependent and can vary

across programs and stakeholders. This

determination is naturally tied to the

evaluation design, implementation, and

standards adhered to for data collection,

analysis, and interpretation. Best

practices for your program area and the

evaluation standards included in the CDC

Framework (Utility, Feasibility, Propriety,

and Accuracy) and espoused by the

American Evaluation Association (http://www.eval.org) will facilitate this discussion with your

ESW. This discussion allows for stakeholder input as to what methods are most appropriate

given the questions and context of your evaluation. As with all the steps, transparency is important to the credibility discussion, as is documentation of the limitations of the evaluation methods or design. This increases the likelihood that results will be acceptable to stakeholders, strengthens the value of the evaluation, and makes it more likely that the information will be used for program improvement and decision making. The value of

stakeholder inclusion throughout the development of your evaluation plan is prominent in

Step 4. More information on the standards can be found in the resources section.

Measurement

If the method selected includes indicators and/or performance measures, the discussion

of what measures to include is critical and often lengthy. This discussion is naturally tied to

the data credibility conversation, and there is often a wide range of possible indicators or

performance measures that can be selected for any one evaluation question. You will want

to consult best practices publications for your program area and even other programs in

neighboring states or locales. The expertise that your ESW brings to the table can facilitate

this discussion. The exact selection of indicators or performance measures is beyond the

scope of this workbook. Resource information is included in Part II of this workbook, such

as the Key Outcome Indicators for Evaluating Comprehensive Tobacco Programs guide.

CDC’s Framework for Program Evaluation


Data Sources and Methods

As emphasized already, it is important to select the method(s) most appropriate to answer

the evaluation question. The types of data needed should be reviewed and considered for

credibility and feasibility. Based on the methods chosen, you may need a variety of inputs, such as case studies, interviews, naturalistic inquiry, focus groups, standardized indicators, and surveys. You may need to consider multiple data sources and the triangulation of data for reliability and validity of your information. Data may come from existing sources (e.g., Behavioral Risk Factor Surveillance System, Youth Risk Behavior Surveillance System, Pregnancy Risk Assessment Monitoring System) or be gathered from program-specific sources (either

existing or new). You most likely will need to consider the establishment of surveillance and

evaluation systems for continuity and the ability to successfully conduct useful evaluations.

The form of the data (either quantitative or qualitative) and specifics of how these data will

be collected must be defined, agreed upon as credible, and transparent. There are strengths

and limitations to any approach, and they should be considered carefully with the help of

your ESW. For example, the use of existing data sources may help reduce costs, maximize

the use of existing information, and facilitate comparability with other programs, but may

not provide program specificity. Additionally, existing sources of data may not meet the

question-method appropriateness criteria.

It is beyond the scope of this workbook to discuss in detail the complexities of choosing appropriate methods or data sources. It is important to remember that not all methods fit all evaluation questions, and a mixed-methods approach is often the best option for a comprehensive answer to a particular evaluation question. This is often where you need to consult your evaluation experts for direction on matching method to question. More information can be found through the resources listed in Part II. Note that all data collected should have a clear link to the associated evaluation question and its anticipated use; this reduces unnecessary burden on respondents and stakeholders. Over the course of a multi-year evaluation plan, it is important to revisit data collection efforts and weigh their utility against the burden on respondents and stakeholders. Finally, a word of caution: it is not enough to have defined measures. Quality assurance procedures must be put into place so that data are collected reliably, coded and entered correctly, and checked for accuracy. A quality assurance plan should be included in your evaluation plan.


Roles and Responsibilities

Writing an evaluation plan will not ensure that the evaluation is implemented on time, as intended, or within budget. A critical piece of the evaluation plan is identifying the roles and responsibilities of program staff, evaluation staff, contractors, and stakeholders from the beginning of the planning process. This information should be kept up to date throughout the implementation of the evaluation. Stakeholders must clearly understand their roles in implementing the evaluation. Maintaining an involved, engaged network of stakeholders throughout the development and implementation of the plan will increase the likelihood that their participation serves the needs of the evaluation. An evaluation implementation work plan is as critical to the success of the evaluation as a program work plan is to the success of the program. This is especially true when multiple organizations are involved and/or multiple evaluation activities occur simultaneously.

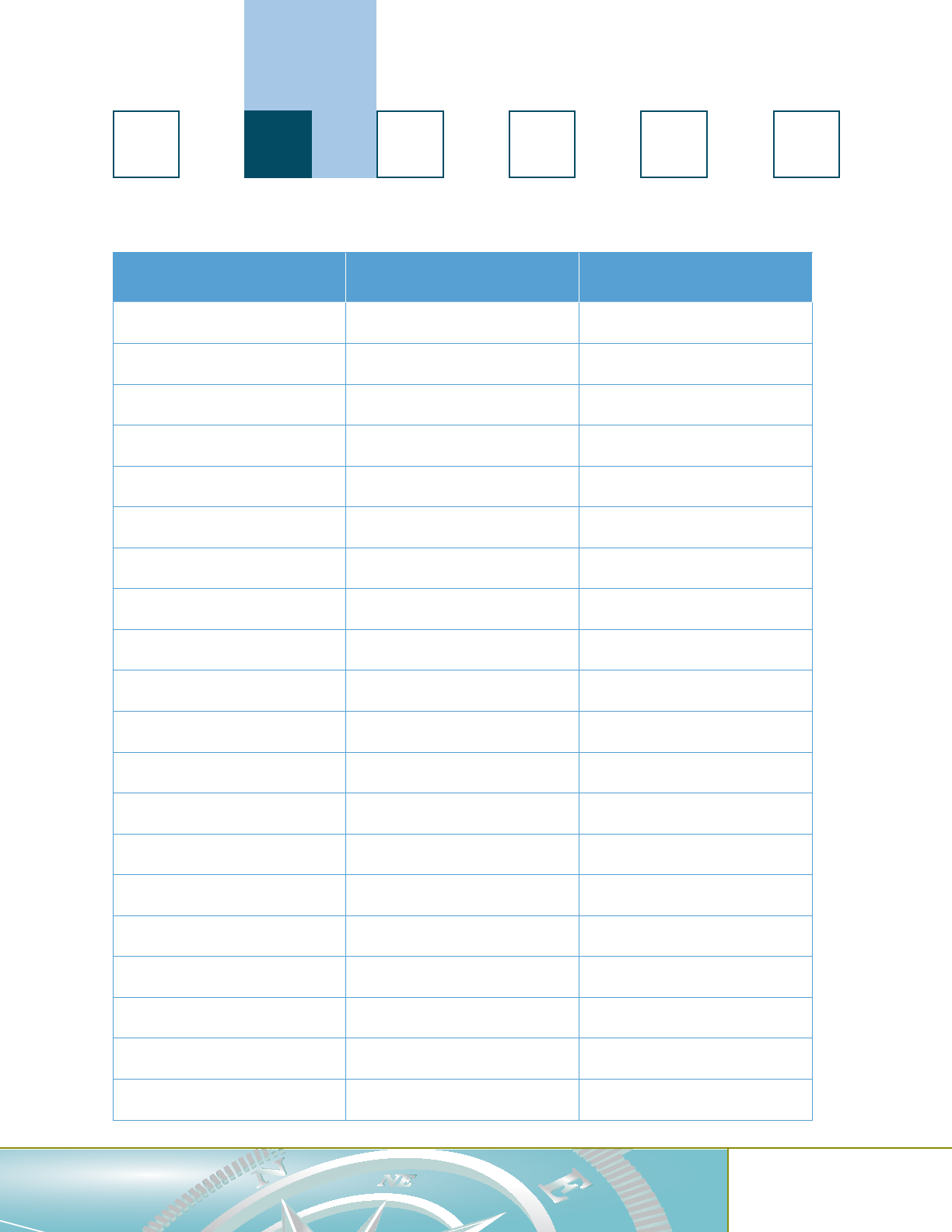

Evaluation Plan Methods Grid

One tool that is particularly useful in your evaluation plan is an evaluation plan methods grid. Not only does this tool help align evaluation questions with methods, indicators, performance measures, data sources, roles, and responsibilities, but it can also facilitate a shared understanding of the overall evaluation plan among stakeholders. The tool can take many forms and should be adapted to fit your specific evaluation and context.

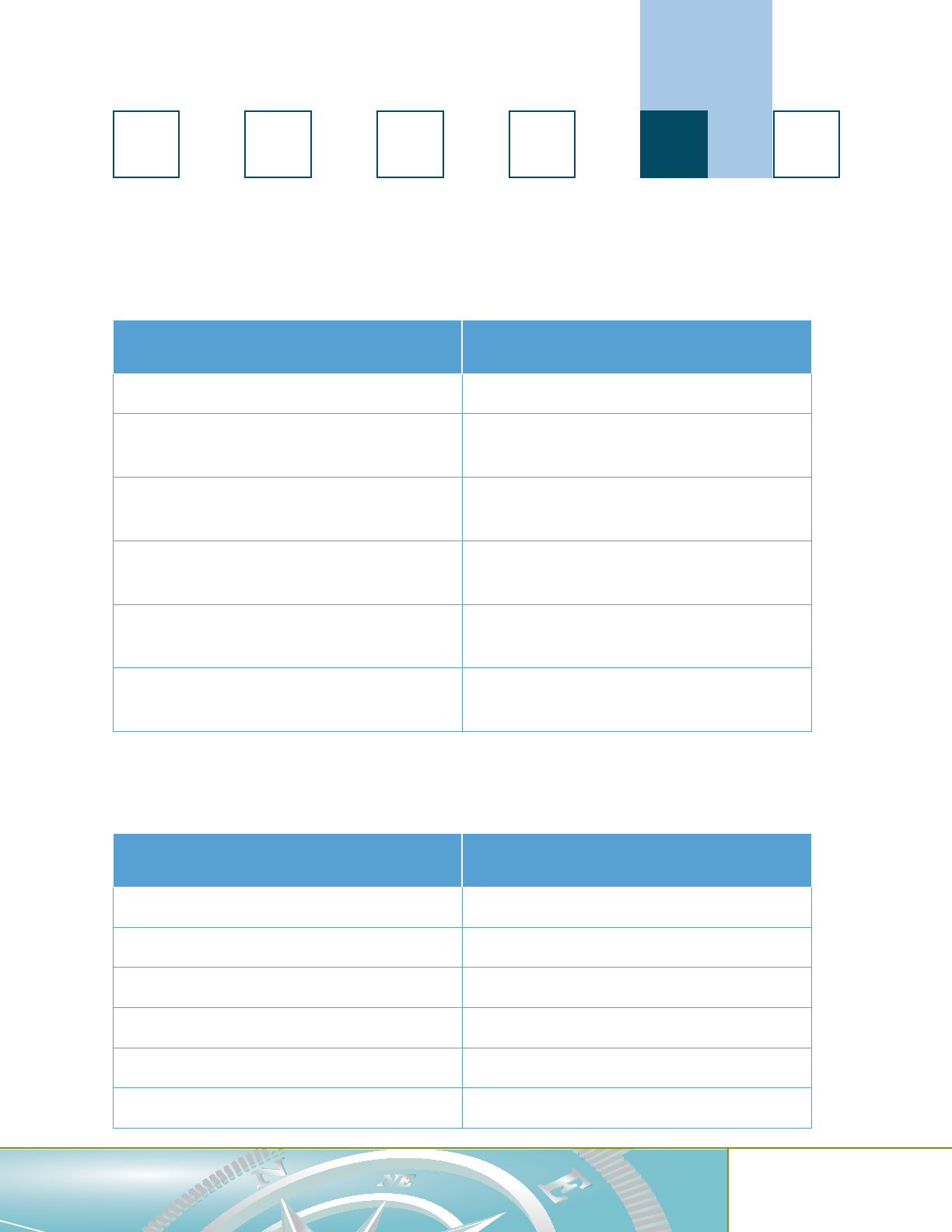

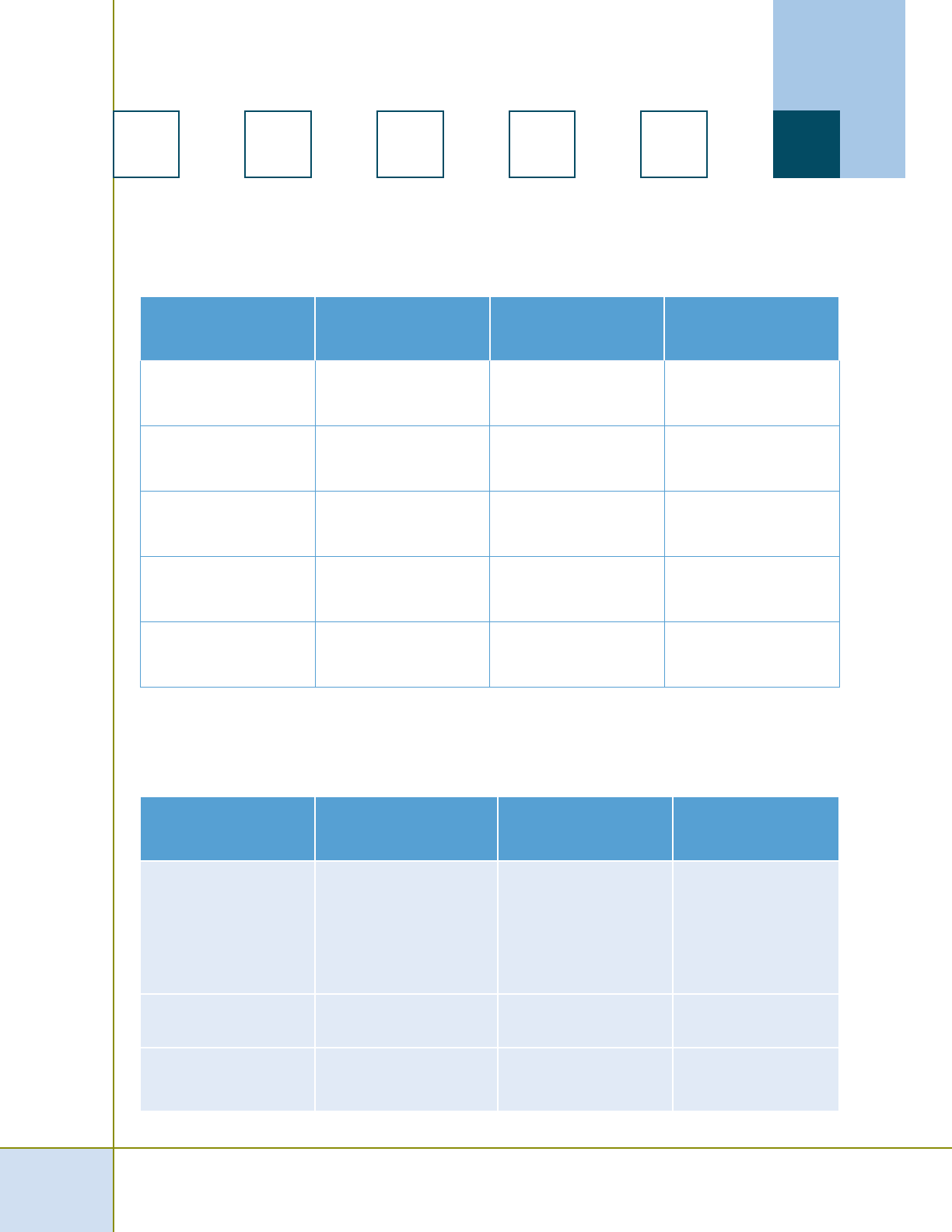

Figure 4.1: Evaluation Plan Methods Grid Example

| Evaluation Question | Indicator/Performance Measure | Method | Data Source | Frequency | Responsibility |
|---|---|---|---|---|---|
| What process leads to implementation of policy? | Interview description of process steps, actions, and strategies | Case study, interviews, document reviews, etc. | Site visits and reports | Pre- and post-funding period | Contractor |


Figure 4.2: Evaluation Plan Methods Grid Example

| Evaluation Question | Indicators/Performance Measure | Potential Data Source (Existing/New) | Comments |
|---|---|---|---|
| What media promotion activities are being implemented? | Description of promotional activities and their reach of targeted populations, dose, intensity | Focus group feedback; Target Rating Point and Gross Rating Point data sources; Enrollment data | |

Budget

The evaluation budget discussion most likely began during Step 3, when the team was discussing the focus of the evaluation and feasibility issues. It is now time to develop a complete evaluation project budget based on the decisions made about the evaluation questions, the methods, and the roles and responsibilities of stakeholders. A complete budget is necessary to ensure that the evaluation project is fully funded and can deliver on its promises.

An Evaluation Plan Methods Grid exercise and more examples can be found in Part II, Section 4.1.

An Evaluation Budget exercise and more examples can be found in Part II, Section 4.2.


EVALUATION PLAN TIPS FOR STEP 4

Select the method(s) that best answer the evaluation question. This often involves a mixed-methods approach.

Gather evidence that the primary users of the evaluation see as credible.

Define implementation roles and responsibilities for program staff, evaluation staff, contractors, and stakeholders.

Develop an evaluation plan methods grid to facilitate a shared understanding of the overall evaluation plan and the timeline for evaluation activities.

EVALUATION TOOLS AND RESOURCES FOR STEP 4:

4.1 Evaluation Plan Methods Grid Exercise

4.2 Evaluation Budget Exercise

AT THIS POINT IN YOUR PLAN, YOU HAVE—

identified the primary users of the evaluation,

created the evaluation stakeholder workgroup,

defined the purposes of the evaluation,

described the program, including context,

created a shared understanding of the program,

identified the stage of development of the program,

prioritized evaluation questions and discussed feasibility issues,

discussed issues related to credibility of data sources,

identified indicators and/or performance measures linked to chosen evaluation questions,

determined implementation roles and responsibilities for program staff, evaluation staff, contractors, and stakeholders, and

developed an evaluation plan methods grid.


Step 5: Planning for Conclusions

Justifying conclusions includes analyzing the information you collect, interpreting it, and drawing conclusions from your data. This step is needed to turn the data collected into meaningful, useful, and accessible information. This is often when programs incorrectly assume they no longer need the ESW integrally involved in decision making and instead look to the “experts” to complete the analyses and interpretation. However, engaging the ESW in this step is critical to ensuring the meaningfulness, credibility, and acceptance of evaluation findings and conclusions. Actively meeting with stakeholders and discussing preliminary findings helps to guide the interpretation phase. In fact, stakeholders often bring insights or perspectives that evaluation staff may lack, leading to more thoughtful conclusions.

Planning for analysis and interpretation is directly tied to the timetable begun in Step 4. Errors or omissions in planning this step can create serious delays in the final evaluation report and may result in missed opportunities if the report has been timed to coincide with significant events. Groups often fail to appreciate the resources, time, and expertise required to clean and analyze data, whether qualitative or quantitative. Some programs focus their efforts on collecting data but never fully appreciate the time it takes to prepare the data for analysis, interpretation, feedback, and conclusions. These programs suffer from “D.R.I.P.”: they are “Data Rich but Information Poor.” Survey data remain “in boxes,” or interviews are never fully explored to identify themes.

After planning for the analysis of the data, you need to prepare to examine the results to determine what the data actually say about your program. These results should be interpreted in light of your program’s goals, its social and political context, and the needs of its stakeholders.

Moreover, it is critical that your plans include time for stakeholders to interpret and review the results, which increases the transparency and validity of your process and conclusions.

The emphasis here is on justifying conclusions, not just analyzing data. This step deserves due diligence in the planning process. The propriety standard guides the evaluator’s decisions on how to analyze and interpret data so that all stakeholder values are respected in the process of drawing conclusions (Program Evaluation Standards, 1994). In other words, it addresses who needs to be involved for the evaluation to be ethical. This may include one or more stakeholder interpretation meetings to review interim data and further refine conclusions. A note of caution: because this is a stakeholder-driven process, there is often pressure to reach beyond the evidence when drawing conclusions.


It is the responsibility of the evaluator and the ESW to ensure that conclusions are drawn directly from the evidence. This topic should be discussed with the ESW during the planning stages, along with reliability and validity issues and possible sources of bias. If possible and appropriate, consider triangulating data, and identify remedies for threats to the credibility of the data as early as possible.