U.S. Department of Health & Human Services | National Institutes of Health

Implementation Science at a Glance

A Guide for Cancer Control Practitioners


Foreword

Since the National Cancer Institute (NCI) Implementation Science Team was formed within the

Division of Cancer Control and Population Sciences some fifteen years ago, we have seen the

importance of improving the resources needed to support implementation of evidence-based

cancer control interventions. While the team also focuses on efforts to advance the science

of implementation, and considers the need to integrate implementation science within the

broader field of cancer control and population sciences, we know that unless we support the

adoption, implementation, and sustainment of research-tested interventions in community

and clinical settings, we will not move very far in reducing the burden of cancer. To that end,

we recognize that the advances in our understanding of implementation processes in recent

years will have greater benefit if communicated in a way that supports and informs the

important work of cancer control practitioners.

This resource, Implementation Science at a Glance, is intended to help practitioners and policy

makers gain familiarity with the building blocks of implementation science. Developed by

our team and informed by our ongoing collaborations with practitioners and policy makers,

Implementation Science at a Glance introduces core implementation science concepts, tools,

and resources, packaged in a way that maps to the various stages that practitioners may

find themselves in as they seek to use evidence-based interventions to meet the needs of

patients, families, and communities. This resource also includes several case examples of

how cancer control organizations have gone through the process of exploring evidence-

based interventions, preparing for their integration into varied practice settings, actively

implementing them, and evaluating their impact over time.

While we know that the volume of implementation science topics can fill many books, we

hope that this resource provides an initial set of valuable and digestible information, along

with suggested resources for those interested in learning more. In addition, we hope that

this resource can continue to be refined over time, and that you share your experiences in

applying this to the betterment of your communities and key constituents. Thank you for all

your efforts to address cancer control needs, and thanks in advance for your guidance as we

improve the impact of our research.

David A. Chambers, DPhil

Deputy Director for Implementation Science

Division of Cancer Control and Population Sciences

National Cancer Institute


Acknowledgments

The National Cancer Institute would like to thank the many cancer control practitioners,

implementation scientists, and program partners whose thoughtful comments and robust

contributions were integral to the creation of this resource.

We particularly thank Amy Allen, Stephenie Kennedy-Rea, and Mary Ellen Conn; Ann-Hilary

Heston; Kathryn Braun; and Suzanne Miller Halegoua and Erin Tagai for their contributions to

the case studies.

We are grateful to the NCI Implementation Science Team, particularly Margaret Farrell, Wynne

Norton, and Prajakta Adsul, who provided hours of review and consultation and guided

this resource to its completion. We note with gratitude the work of Dalena Nguyen, whose

attention to detail and commitment to excellence greatly enhanced the resource’s content and

quality, as well.


Contents


Foreword

Acknowledgments

Introduction

Who Is This Guide For?

How Do I Use This Guide?

What Is Implementation Science and Why Is It Important?

Assess

Engaging Stakeholders and Partners

Confirming Evidence for an Intervention

Choosing an Intervention

Prepare

Maintaining Fidelity

Adapting an Intervention

Implement

Diffusion of Innovations

Consolidated Framework for Implementation Research

Interactive Systems Framework for Dissemination and Implementation

Implementation Strategies

Evaluate

What to Evaluate

How to Evaluate

Sustainability

Scaling Up

De-Implementing

Case Studies

West Virginia Program to Increase Colorectal Cancer Screening

Kakui Ahi (Light the Way): Patient Navigation

Tailored Communication for Cervical Cancer Risk

LIVESTRONG® at the YMCA

Implementation Resources for Practitioners

Glossary of Terms

References


Introduction

Implementation Science at a Glance details how greater use of implementation science

methods, models, and approaches can improve cancer control practice.

While many effective interventions can reduce cancer risk, incidence, and death, as well as

enhance quality of life, they are of no benefit if they cannot be delivered to those in need.

Implementation strategies are essential to improve public health. In the face of increasingly

dynamic and resource-constrained conditions, implementation science plays a critical role in

delivering cancer control practices.

Implementation Science at a Glance provides a single, concise summary of key theories, methods,

and considerations that support the adoption of evidence-based cancer control interventions.

Who Is This Guide For?

We wrote this guide for cancer control practitioners who seek an overview of implementation

science that is neither superficial nor overwhelming.

Implementation science is a rapidly advancing field. Researchers from many disciplines are

studying and evaluating how evidence-based guidelines, interventions, and programs are put

into practice.

How Do I Use This Guide?

Implementation Science at a Glance offers a systematic approach to implement your evidence-

based, public health program, regardless of where you are in your implementation process.

We organized this guide into a four-stage framework: assess, prepare, implement, and

evaluate. Each stage poses important questions for practical considerations.

Look for ADDITIONAL RESOURCES, which links to a list of additional resources at the

end of this workbook.

While this guide is organized into four distinct stages, these components blend and overlap

in practice. We have also included four case studies to illustrate how implementation science

plays out in real-world settings.


ASSESS
» Evidence-Based Interventions
» Stakeholder Engagement and Partnerships

PREPARE
» Adaptations
» Fidelity

IMPLEMENT
» Theories
» Models
» Frameworks
» Implementation Strategies

EVALUATE
» Sustainability
» Scale-Up
» De-Implementation
» Return on Investment

What Is Implementation Science and Why Is It Important?

Implementation science is the study of methods to promote the adoption and integration

of evidence-based practices, interventions, and policies into routine health care and public

health settings to improve the impact on population health.¹

Implementation science examines how evidence-based programs work in the real world. By

using implementation science and implementation strategies, you can help bridge the divide

between research and practice—and bring programs that work to communities in need.

Applying implementation science may help you understand how to best use specific strategies

that have been shown to work in your (or similar) settings.

By applying implementation science frameworks and models, you may:

» Reduce program costs

» Improve health outcomes

» Decrease health disparities in your community

Assess

Engaging Stakeholders and Partners

People and place matter. Therefore, it is

important to seek out stakeholder input

throughout your process of preparation and

implementation. Consider partnering with

researchers to advance your goals. Creating

meaningful partnerships will help you:

» Better understand your community and

its strengths and weaknesses, assets,

values, culture, traditions, leaders, and

feelings on change

» Increase the likelihood that

your intervention will be adopted

and sustained

» Ensure that your intervention is

relevant to stakeholders

» Enhance the quality and practicality

of your efforts

» Disseminate your evaluation findings

» Create relationships with academic centers

that can help sustain your program

Creating and leveraging partnerships with

researchers and academic programs can

empower communities and create social

change. Successful research and community

collaboration can be particularly effective in:

» Fostering a willingness to learn from

one another

» Building community members’

involvement in research

» Benefiting all partners with

research outcomes

» Increasing buy-in for your program

What You Can Do:

Engage Stakeholders

Stakeholders are people, communities,

and organizations that could be

affected by a situation. While internal

stakeholders participate through

coordinating, funding, and supporting

implementation efforts, external

stakeholders contribute views and

experiences in addressing the issues

important to them as patients,

participants, and members of the

community.²,³

Ask these questions to identify key

stakeholders:

» Who will be affected by what we are

doing or proposing?

» Who are the relevant officials?

» What are the relevant organizations?

» Who has been involved in similar

situations in the past?

» Who or what is frequently

associated with relevant topic areas?

Measuring and assessing outcomes

important to stakeholders can have

a significant impact on the adoption,

implementation, and sustainment of

evidence-based practices.

Remember that stakeholder

engagement is necessary throughout

the entire implementation process.

These questions can help guide you as

you move through the assess, prepare,

implement, and evaluate stages.


Key Questions

ASSESS

» Does your selected

evidence-based

intervention take

stakeholders’ goals and

needs into account?

PREPARE

» How will stakeholder

engagement help

achieve program

objectives?

» Does your plan

include stakeholder

engagement on key

decisions?

» Can your team engage

stakeholders effectively,

or will you need to hire

experts?

IMPLEMENT

» Did you identify and

communicate clear roles

for all stakeholders?

» Did you include

resources for

stakeholder

engagement in your

implementation plan?

» Are you planning to

include stakeholder

engagement as you

monitor, review,

and evaluate your

implementation?

EVALUATE

» How will you adjust your

implementation plan in

response to stakeholder

feedback?

» What outcomes are

most important to each

group of stakeholders

and each partner?

Confirming Evidence for an Intervention

Cancer control practitioners make decisions based on various types of evidence: from more

subjective evidence—such as their direct experience with the populations they work with—to

more objective sources of evidence—including the results of well-designed research studies.

In this resource, we use the terms “interventions,” “practices,” and “programs”

interchangeably. We generally consider an intervention to be a combination of program

components, while a program often groups several interventions together.

An evidence-based intervention is a health-focused intervention, practice, policy, or guideline

with evidence demonstrating its ability to change a health-related behavior or outcome.⁴

Using evidence-based interventions can not only increase your effectiveness but also help

save time and resources.

The less robust the body of evidence supporting a program’s effectiveness, the more

important it is to evaluate the program and share your results.


OBJECTIVE

SYSTEMATIC REVIEWS: Summaries of a body of evidence made up of multiple studies and recommendations

RESEARCH STUDIES: Individual studies that test a specific intervention

PRACTITIONER REPORTS: Reports, briefs, or evaluations of a strategy in practice

EXPERT OPINION/PERSONAL EXPERIENCE: Recommendations made by credible groups or individuals that have not yet been tested

SUBJECTIVE

Figure 1. A continuum of evidence to support interventions⁵

What You Can Do:

Make Sure the Intervention Is

Evidence-Based

How will you know if an intervention

is evidence-based? A quick internet

search may suggest a wide variety of

interventions, which may or may not

be evidence-based. It is important to

evaluate these potential sources.

Evaluate existing information on the

intervention, and consider:⁴

» Who created the information?

» What types of interventions are

highlighted?

» What methods were used to review

the evidence?

» What criteria were applied to assess

an intervention?

» How current is the evidence?

» Are resources available to help you

implement the intervention?

The following resources may provide

evidence to support your intervention:

» United States Preventive Services

Task Force

» NCI Research-Tested Intervention

Programs

» Healthy People Tools and Resources

(Healthy People 2020)

» Pew-MacArthur Results First

Initiative

» The Guide to Community Preventive

Services (The Community Guide)


Choosing an Intervention

While it is important for interventions to be grounded in research and evidence, your program

will only be effective if it “fits” your community population and your resources.

When choosing an evidence-based intervention to implement, consider the following:⁶

» Does this intervention fit our community’s demographics, needs, values, and risk factors?

» Different interventions will take different amounts of money, labor, and time.

– Does our organization have the capacity and resources this intervention requires?

– Do we have the expertise to implement this intervention?

– Can we engage partners or leverage other resources?

» Does this intervention target our overall goal?

After selecting your intervention, be wary about recommending adaptations without specific

guidance, such as from the original developers. Adapting some aspects of an intervention can

lead to a “voltage drop”: a change in expected outcome when an intervention moves from a

research setting into a real-world context.

The following sections can help guide you as you decide whether to implement an intervention as-is, if it is a good fit, or to first adapt it to your local community.

DECISION MAKING
» Population characteristics, needs, values, and preferences
» Resources, including practitioner expertise
» Best available research evidence

Figure 2. Components to consider when selecting an intervention⁷

ADDITIONAL RESOURCES

Prepare

Maintaining Fidelity

Fidelity refers to the degree to which an evidence-based intervention is implemented without compromising the core components essential for the program’s effectiveness.⁸,⁹ Lack of fidelity to the original design or intent makes it difficult to know which version of the intervention was implemented and, therefore, what exactly caused the outcomes.

Why is fidelity important?

One of the most common reasons that practitioners do not get the results they anticipate is

that they have not properly implemented the practice or program. To avoid this problem, and

to get better results, you must understand the importance of implementing the practice or

program as intended.

When interventions implemented with fidelity are compared to those not implemented with fidelity, the difference in effectiveness can be profound. Those implemented with fidelity will have a greater impact on outcomes than those implemented without fidelity.¹⁰

Adapting an Intervention

Evidence-based interventions are not one size fits all. You may have to adapt them to better fit the population or local conditions.¹² Adaptations may involve the addition, deletion, expansion, reduction, or substitution of various intervention components.⁸,¹²,¹³ Core components to adapt to may include the setting, target audience, delivery, or culture.¹⁴

Adapting an intervention can help improve health equity. For example, organizations that

serve communities with limited economic resources, such as local health departments

or safety-net health centers, may adapt some parts of the intervention to leverage their

resources while still working toward similar outcomes.

Additionally, sharing information about your adaptations and results can contribute to a

greater understanding of the full range of factors that impact implementation in high-need

and under-resourced areas.

There are many areas in which changes to the original intervention can take place. See Figure 3.

The core components of an intervention relate to its:¹¹

» Content – the substance, service, information, or other material that the intervention

provides (e.g., screening tests)

» Delivery – how the intervention is implemented (e.g., setting, format, channels, providers)

» Method – how the intervention will affect participants’ behavior or environment


Before adapting an intervention, consider

the following:

» Are adaptations necessary?

» How important is it to your partners to

adapt this intervention?

» What adaptation would you make?

» Do you have the resources to implement

the adapted intervention?

SERVICE SETTING

TARGET AUDIENCE

MODE OF DELIVERY

CULTURE

CORE COMPONENTS

Figure 3. Sources of intervention adaptation¹⁴

What You Can Do: Balance Fidelity and Adaptations

Making too many changes to an intervention can reduce its original effectiveness, or worse, introduce unintended and harmful outcomes.

Before making adaptations to the intervention, you should think about how the change to the original intervention can improve the fit to your community, setting, or target population, and at the same time, maintain fidelity to the core components of the original intervention. Think of possible adaptations as you would a green, yellow, or red traffic light: green light changes are usually OK to make; yellow light changes should be approached with caution; and red light changes should be avoided when possible.¹²

GREEN LIGHT CHANGES
» Usually minor
» Made to increase the reach, receptivity, and participation of the community
» May include:
– Program names
– Updated and relevant statistics or health information
– Tailored language, pictures, cultural indicators, scenarios, and other content

YELLOW LIGHT CHANGES
» Typically add or modify intervention components and contents, rather than deleting them
» May include:
– Substituting activities
– Adding activities
– Changing session sequence
– Shifting or expanding the primary audience
– Changing the delivery format
– Changing who delivers the program

RED LIGHT CHANGES
» Changes to core components of the intervention
» May include:
– Changing a health behavior model or theory
– Changing a health topic or behavior
– Deleting core components
– Cutting the program timeline
– Cutting the program dosage
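For teams that track planned adaptations in a spreadsheet or script, the traffic-light lists above could be encoded as a simple lookup. The following is a minimal sketch, not part of the original guide: the short labels (such as `rename_program`) and the function name `review_adaptations` are hypothetical, and any adaptation not on the lists is set aside for discussion rather than guessed at.

```python
from enum import Enum


class Light(Enum):
    GREEN = "usually OK to make"
    YELLOW = "approach with caution"
    RED = "avoid when possible"


# Adaptation types taken from the lists above; the short keys are
# hypothetical labels chosen for this illustration.
ADAPTATION_LIGHTS = {
    "rename_program": Light.GREEN,
    "update_statistics": Light.GREEN,
    "tailor_language_or_images": Light.GREEN,
    "substitute_activities": Light.YELLOW,
    "add_activities": Light.YELLOW,
    "change_session_sequence": Light.YELLOW,
    "shift_primary_audience": Light.YELLOW,
    "change_delivery_format": Light.YELLOW,
    "change_deliverer": Light.YELLOW,
    "change_behavior_model": Light.RED,
    "change_health_topic": Light.RED,
    "delete_core_component": Light.RED,
    "cut_timeline": Light.RED,
    "cut_dosage": Light.RED,
}


def review_adaptations(planned):
    """Group planned adaptations by light.

    Labels that are not in the lookup are returned separately so the
    team can discuss them (for example, with the original developers).
    """
    grouped = {light: [] for light in Light}
    unknown = []
    for name in planned:
        light = ADAPTATION_LIGHTS.get(name)
        if light is None:
            unknown.append(name)
        else:
            grouped[light].append(name)
    return grouped, unknown
```

A call such as `review_adaptations(["rename_program", "cut_dosage"])` would place the first item under green and the second under red, which can help a team see at a glance which proposed changes need the most scrutiny.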


What You Can Do: Use a Systematic Approach to Adaptations

Try this five-step process when adapting an intervention.¹² The more adaptations you make, the more you will need to re-evaluate the effectiveness of the intervention.

1. ASSESS FIT and consider adaptation
2. ASSESS THE ACCEPTABILITY and importance of adaptation
3. MAKE FINAL DECISIONS about what and how to adapt
4. MAKE ADAPTATIONS
5. PRETEST AND PILOT TEST

Figure 4. A systematic approach to adapt your intervention¹²

Implement

There are multiple theories, models, and frameworks frequently used in implementation science

that can guide you as you plan, implement, and evaluate your intervention. While theories,

models, and frameworks are distinct concepts, this resource uses them interchangeably.

Implementation science models provide guidance for understanding how to address the gap

between identifying an intervention and ensuring its adoption (the research-to-practice gap)

and later sustaining the intervention.

By spending the time to understand these underlying processes, you will be better prepared

to more rapidly move effective programs, practices, or policies into communities.

Models can help you understand the logic of how your implementation effort creates an

impact and offer clear constructs to measure that impact.

Using models can also help you find problem areas in your setting and help guide the

selection of implementation strategies. Some models include:

» Diffusion of Innovations

» Consolidated Framework for Implementation Research

» Interactive Systems Framework for Dissemination and Implementation

Diffusion of Innovations

Diffusion of Innovations theory refers to the process by which an innovation is communicated

over time through members of a social network.¹⁵

Diffusion consists of four elements:

» The innovation, idea, practice, or object that is intended to be spread

» Communication or the exchange of messages

» A social system, structure, or group of individuals that interact

» A process of dissemination or diffusion that occurs over time

This theory suggests that an innovation, like an evidence-based intervention, will be

successful or adopted by individuals when the innovation is diffused or distributed through

communities.¹⁶

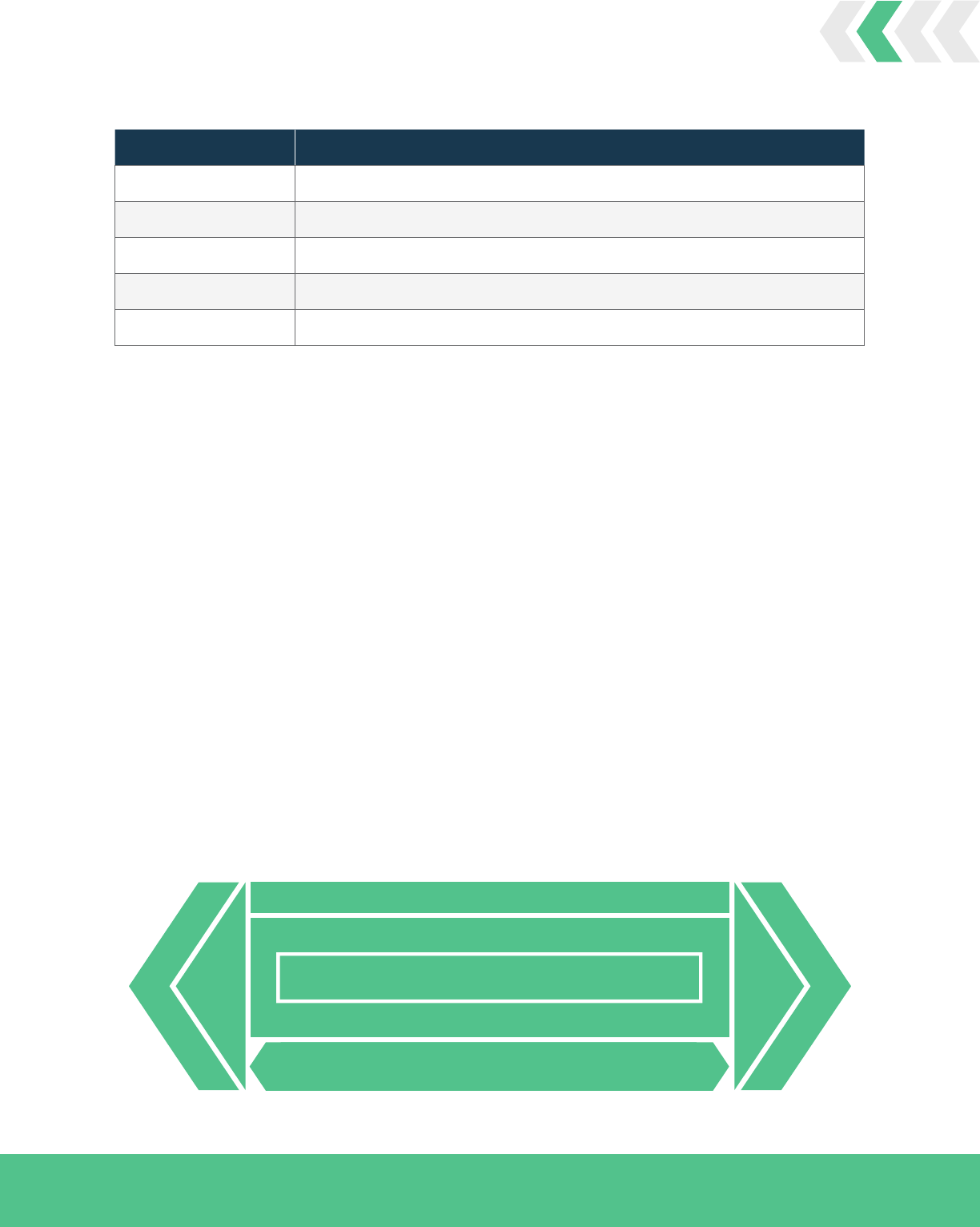

Tables 1 and 2 describe the theory further.¹⁶

| Constructs | Definition |
| --- | --- |
| Innovation | An idea, object, or practice that an individual, organization, or community believes is new |
| Communication channels | The means of transmitting the new idea from one person to another |
| Social systems | A group of individuals who together adopt the innovation |
| Time | How long it takes to adopt the innovation |

Table 1. Concepts of Diffusion of Innovations


Table 2. Key Attributes Affecting the Speed and Extent of an Innovation’s Diffusion

| Constructs | Definition |
| --- | --- |
| Relative advantage | Is the innovation better than what it will replace? |
| Compatibility | Does the innovation fit with the intended audience? |
| Complexity | Is the innovation easy to use? |
| Testability | Can you test the innovation before deciding to adopt? |
| Observability | Are the results of the innovation observable and easily measurable? |

Consolidated Framework for Implementation Research

The Consolidated Framework for

Implementation Research (CFIR) can help

you identify what aspects of your context

you should assess during the planning

process. CFIR has five domains:¹⁷

» Intervention characteristics

» Characteristics of individuals involved

» Inner setting

» Outer setting

» Process

Each CFIR domain provides a menu of

key factors for you to choose from. The

factors have been linked with the effective

implementation of interventions. Examples

include, but are not limited to:

» Intervention characteristics: relative

advantage, complexity, cost

» Characteristics of individuals involved:

self-efficacy, knowledge/beliefs about

intervention

» Inner setting: culture, readiness for

implementation

» Outer setting: external policy and incentives

» Process: planning, champions

[Figure labels: OUTER SETTING; INNER SETTING; CHARACTERISTICS OF INDIVIDUALS; PROCESS; INTERVENTION (UNADAPTED) and INTERVENTION (ADAPTED), each with CORE COMPONENTS and an ADAPTABLE PERIPHERY]

Figure 5. Consolidated Framework for Implementation Research¹⁷


Interactive Systems Framework for Dissemination and Implementation

The Interactive Systems Framework (ISF) for Dissemination and Implementation was developed to address the “how-to” gap between scientifically determining what works and moving that knowledge into the field for the benefit of the public.¹⁸

Figure 6 shows the ISF and how it connects three systems to work together for successful dissemination and implementation. The term “system” is used broadly to describe a set of activities that accomplish one of the three identified functions that make dissemination and implementation possible. These systems are:¹⁹

SYNTHESIS AND TRANSLATION SYSTEM – Here, scientific knowledge is distilled into

understandable and actionable information. Research institutions, universities, and NCI

are all institutional examples of this system.

SUPPORT SYSTEM – This system supports the work of the other two systems by building

the capacity to carry out prevention activities. Agencies like state health departments or

state cancer control coalitions are often in the role of prevention support for grantees or

local programs.

DELIVERY SYSTEM – This is where innovations are implemented or where “the rubber

meets the road.” Community-based organizations often function in the role of the

prevention delivery system.

As depicted in Figure 6, these three systems work together and are embedded within an

underlying context that influences decision making and the adoption of interventions. These

underlying conditions include:

» Legislation that supports funding for cancer prevention and control

» The best available theory and research evidence

» The community or organizational context in which interventions are implemented

» Macro-level policy factors such as state or federal budget constraints or legislative changes

These underlying considerations are graphically displayed as the climate in which the three

systems exist, and all of these have an impact on successful dissemination and implementation.

Each system within the ISF also builds upon or influences the functions of the other two

systems. These relationships and influences are represented by the arrows that connect the

systems to each other.

The ISF can offer you a non-exhaustive list of practical considerations and strategies to

address each of the three systems involved. These strategies will make up the implementation

effort, leading to the population health and implementation outcomes you seek to change.¹⁹,²⁰


[Figure labels: DELIVERY SYSTEM; SUPPORT SYSTEM; SYNTHESIS & TRANSLATION SYSTEM (SYNTHESIS, TRANSLATION); MOTIVATION, GENERAL CAPACITY, and INNOVATION-SPECIFIC CAPACITY (each shown twice); FUNDING; MACRO-POLICY; CLIMATE; OUTCOMES]

Figure 6. The Interactive Systems Framework for Dissemination and Implementation¹⁹


Implementation Strategies

Implementation strategies are the “how-to” components of interventions.⁴,²¹ Think of these as ways to implement evidence-based practices, programs, and policies.

Implementation strategies are the essential components of implementation science but are often neither adequately described nor properly labeled. A commonly used definition describes them as “specific methods or techniques used to enhance the adoption, implementation, and sustainability of a public health program or practice.”²¹ It is important to note that an implementation strategy focuses on improving implementation outcomes such as acceptability, adoption, appropriateness, feasibility, costs, fidelity, penetration, and sustainability.

Recent progress has been made to identify and define strategies relevant to the health care context, which resulted in a list of seventy-three distinct strategies.²² These strategies can be grouped into eleven categories, as shown in Figure 7. This list provides a good starting point to understand the different types of strategies that have been used and tested previously, and also facilitates the selection of strategies that might be relevant to your practice context.

» CONVENE TEAMS
» PRACTICE FACILITATION
» PROVIDE INTERACTIVE ASSISTANCE
» DEVELOP STAKEHOLDER INTERRELATIONSHIPS
» UTILIZE FINANCIAL STRATEGIES
» ENGAGE CONSUMERS
» USE EVALUATION PLAN AND INTERACTIVE STRATEGIES
» SUPPORT PRACTITIONERS
» CHANGE INFRASTRUCTURE
» ADAPT AND TAILOR TO CONTEXT
» TRAIN AND EDUCATE STAKEHOLDERS

Examples of “Train and Educate Stakeholders” Strategies
» Conduct educational outreach visits
» Use train-the-trainer strategies
» Create a learning collaborative
» Provide ongoing consultation

Figure 7. Implementation strategy categories and examples²³


What You Can Do: Identify Implementation Strategies

To maximize the potential of your implementation efforts, it is important that you select strategies

that fit your local context.

Discuss with stakeholders the factors that may influence how your intervention is implemented.

Their perspectives can provide important insights about the community and other contexts.

Generating a list of these contextual considerations can be an important step to determine which

implementation strategies best fit the local context. Table 3 illustrates how, once you have this list,

you may select strategies to address these determinants.

It is rare to use a single strategy during implementation. Selecting multiple strategies to address

multiple barriers to implementing the intervention may be necessary. You may also need to select

different strategies in different phases of implementation.

Methods such as concept mapping and intervention mapping may also help you select relevant

implementation strategies.²⁴

| Identified Factor (YOUR DETERMINANT) | Implementation Strategy (YOUR STRATEGY) |
| --- | --- |
| Lack of knowledge | Interactive education sessions |
| Beliefs or attitudes | Peer influence or opinion leaders |
| Community-based services | Process redesign |

Table 3. Selecting Strategies Based on Influential Factors
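The pairing in Table 3 can be sketched as a small lookup that turns a list of determinants gathered from stakeholders into candidate strategies. This is an illustration only, under stated assumptions: the three pairs come from Table 3, while the names `STRATEGY_BY_DETERMINANT` and `select_strategies` are hypothetical. A real list of determinants and strategies would come from your own stakeholder discussions.

```python
# Determinant-to-strategy pairs copied from Table 3; the variable and
# function names are hypothetical and exist only for this sketch.
STRATEGY_BY_DETERMINANT = {
    "lack of knowledge": "interactive education sessions",
    "beliefs or attitudes": "peer influence or opinion leaders",
    "community-based services": "process redesign",
}


def select_strategies(determinants):
    """Return (candidate strategies, determinants with no match).

    Several determinants may be supplied, reflecting that it is rare to
    use a single strategy. Unmatched determinants are returned so they
    can be discussed further instead of being silently dropped.
    """
    selected = []
    unmatched = []
    for determinant in determinants:
        key = determinant.strip().lower()
        strategy = STRATEGY_BY_DETERMINANT.get(key)
        if strategy is None:
            unmatched.append(determinant)
        elif strategy not in selected:
            selected.append(strategy)
    return selected, unmatched
```

Returning unmatched determinants separately reflects the guide's point that strategy selection depends on local context: a factor without a ready-made pairing is a prompt for more discussion or for methods such as concept mapping, not something to ignore.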

ADDITIONAL RESOURCES

Evaluate

What to Evaluate

Is what we’re doing working?

Why or why not?

How do we show the value of the work we do?

Evaluation is the systematic collection of information about activities, characteristics, and results of programs to assess the program and implementation outcomes.²⁵

Depending on what you and your organization prioritize, you can choose specific outcomes

you want to target, define what success would look like, and then evaluate your success.

Key Outcomes

There are many outcomes that you can evaluate to assess or determine whether your

implementation efforts were successful.

There are distinct categories of outcomes you can evaluate in implementation science:

» Implementation outcomes

» Program outcomes

» Community outcomes

» Individual outcomes

The differences between these are important. Implementation outcomes assess the effects

of implementation efforts, while community and individual outcomes assess the effects of

the intervention. If your intervention does not achieve community or individual outcomes

as expected, it is important to know whether the failure is due to ineffectiveness of the

intervention in your setting or to ineffective implementation of the intervention. To evaluate

implementation, you must assess implementation outcomes.

As depicted in Table 4, implementation outcomes have three important functions:²⁶

» Indicators of implementation success

» Proximal indicators of implementation process

» Key intermediate outcomes that impact community- and individual-level outcomes

Table 4. Key Outcomes in Implementation Science

Implementation Outcomes

» Acceptability
» Adaptation
» Adoption
» Appropriateness
» Feasibility
» Fidelity
» Maintenance
» Penetration
» Sustainability

Program Outcomes

» Cost-effectiveness
» Effectiveness
» Equity
» Reach

Community Outcomes

» Access to care
» Access to fresh produce
» Built environment
» Disease incidence
» Disease prevalence
» Health disparities
» Immunization and vaccination
» Walkability

Individual Outcomes

» Longevity
» Physical activity and fitness
» Social connectedness
» Quality of life


Measurement Tools

Measurements of some implementation outcomes can be captured by examining attitudes,

opinions, intentions, and behaviors.

26

Additional measures for assessing implementation

outcomes can be found through the Society for Implementation Research Collaboration.

What You Can Do: Choose Outcomes to Measure

Table 5 defines nine implementation outcomes, their most relevant stage during implementation,

and some methods to measure them.

26

| Implementation Outcome | Definition | Implementation Stage | Ways to Measure |
| --- | --- | --- | --- |
| Acceptability | Perception among stakeholders that the intervention is agreeable | Early for adoption; ongoing for penetration; late for sustainability | Survey; interviews; administrative data |
| Adoption | Intention among stakeholders to employ an intervention | Early to mid | Administrative data; observation; interviews; survey |
| Appropriateness | Perceived fit of the innovation or intervention for a given setting, population, or problem | Early (prior to adoption) | Survey; interviews; focus groups |
| Effectiveness | Impact of an intervention on important outcomes | Mid to late | Observation; interviews |
| Feasibility | Extent to which the intervention can be successfully used within a given setting | Early (during adoption) | Surveys; administrative data |
| Fidelity | Degree to which an intervention was implemented as intended by the program developers | Early to mid | Observation checklists; self-reporting |
| Implementation cost | Cost impact of an implementation effort | Early for adoption and feasibility; mid for penetration; late for sustainability | Administrative data |
| Penetration | Integration of an intervention within a community, organization, or system | Mid to late | Program audits; checklists |
| Sustainability | Extent to which the intervention is maintained over time | Late | Program audits; interviews; checklists |

Table 5. Implementation Outcomes


How to Evaluate

A number of approaches are available to structure your evaluation and provide a better

understanding of how and why your implementation efforts succeed or fail.

27

While the

following is not an exhaustive list, it provides a summary of evaluation approaches and

frameworks often used by practitioners to address the pragmatic needs of their context.

Logic Models

Logic models are a visual representation of how a program is expected to produce desired

outcomes.

28

A logic model can be used in the development, planning, and evaluation phases

of your program implementation, but for the purposes of this guide, logic models will be used

as an evaluation tool. In using a logic model, you can identify the interrelationships of your

inputs and activities, and how they relate to your desired short-term, mid-term, and long-term

outcomes to be measured.

Figure 8. Simplified logic model (components shown: Inputs; Intervention Activities; Outputs & Outcomes; Long-Term Outcomes; Contextual and External Factors)

28

Evaluability Assessments

Evaluability assessments are a useful evaluation approach if your program is new or

premature for evaluation.

29,30

These assessments require developing a logic model with all

the stakeholders involved. Evaluability assessments are highly participatory and result in

stakeholders reporting on five findings:

» Plausibility

» Areas of program development

» Evaluation feasibility

» Options for further evaluation

» Critique of current data availability


Reach, Effectiveness, Adoption, Implementation, Maintenance (RE-AIM) Framework

You can use the RE-AIM framework to inform your planning, evaluation, and reporting, but

it is most often used for implementation evaluation.

31

RE-AIM is an especially useful tool for

practitioners because it was created in response to the need for attention to external validity,

or the extent to which the implemented intervention would be generalizable to other real-

world settings.

32,33

Economic Evaluation

Economic evaluations will be useful to you if you are interested in the affordability of your

implementation efforts in achieving individual and community outcomes.

34

A very expensive

intervention that produces a small improvement in outcomes is less appealing than another

intervention that produces the same outcome at a fraction of the cost. Economic evaluations help

you quantify cost-effectiveness and can help justify scaling up the intervention in the future.
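As a rough illustration of the arithmetic behind such a comparison, the Python sketch below computes a cost per unit of outcome and an incremental cost-effectiveness ratio. The class name, function names, and all figures are hypothetical and are not taken from this guide or from any real program. A formal economic evaluation would typically consider more than this simple ratio.

```python
from dataclasses import dataclass


@dataclass
class Option:
    """One intervention option. All values used with this class are hypothetical."""
    name: str
    total_cost: float      # total implementation cost, in dollars
    outcome_units: float   # outcome achieved, e.g. additional people screened


def cost_per_outcome(option: Option) -> float:
    """Average cost of achieving one unit of the outcome."""
    if option.outcome_units <= 0:
        raise ValueError("outcome_units must be positive")
    return option.total_cost / option.outcome_units


def icer(new: Option, comparator: Option) -> float:
    """Incremental cost-effectiveness ratio: extra cost per extra unit of outcome."""
    extra_outcome = new.outcome_units - comparator.outcome_units
    if extra_outcome == 0:
        raise ValueError("options achieve the same outcome; compare total costs instead")
    return (new.total_cost - comparator.total_cost) / extra_outcome


if __name__ == "__main__":
    # Hypothetical figures: two options that achieve the same outcome at different cost.
    expensive = Option("Intervention A", total_cost=50_000, outcome_units=100)
    cheaper = Option("Intervention B", total_cost=12_000, outcome_units=100)
    for opt in (expensive, cheaper):
        print(opt.name, cost_per_outcome(opt))
```

When two options achieve the same outcome, as in the usage example, the comparison reduces to which one costs less per unit of outcome. The incremental ratio is only meaningful when the outcomes differ.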

| Construct | Definition |
| --- | --- |
| Reach | The number, proportion, and representativeness of individuals who are willing to participate in an intervention |
| Effectiveness | The impact of an intervention on important outcomes, including potential negative effects, quality of life, and economic outcomes |
| Adoption | The absolute number, proportion, and representation of settings and intervention agents who are willing to initiate an intervention |
| Implementation | The intervention agents’ fidelity to the various components of an intervention’s protocol (e.g., delivery as intended) |
| Maintenance | The extent to which an intervention or policy becomes institutionalized or part of routine practice and policy |

Table 6. Defining RE-AIM Constructs
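The "number, proportion, and representativeness" wording in the Reach and Adoption definitions can be made concrete with a little arithmetic. The Python sketch below is one possible way to compute these quantities from simple counts. The function names and the example counts are hypothetical, and the subgroup comparison is only one of several ways to look at representativeness.

```python
def proportion(count: int, total: int) -> float:
    """Return count / total after basic sanity checks."""
    if total <= 0:
        raise ValueError("total must be positive")
    if not 0 <= count <= total:
        raise ValueError("count must be between 0 and total")
    return count / total


def representativeness_gap(subgroup_participants: int, participants: int,
                           subgroup_eligible: int, eligible: int) -> float:
    """Share of a subgroup among participants minus its share among all eligible people.

    A value near zero suggests participants resemble the eligible population
    on that characteristic; a negative value suggests under-representation.
    """
    return (proportion(subgroup_participants, participants)
            - proportion(subgroup_eligible, eligible))


if __name__ == "__main__":
    # Hypothetical counts for illustration only.
    reach = proportion(180, 600)      # individuals participating / individuals eligible
    adoption = proportion(12, 40)     # settings initiating / settings approached
    gap = representativeness_gap(45, 180, 240, 600)
    print(f"reach={reach:.2f} adoption={adoption:.2f} subgroup gap={gap:+.2f}")
```

Reach is computed over individuals and adoption over settings or intervention agents, so the two proportions use different denominators even though the calculation is the same.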


Sustainability

Your intervention can only deliver population benefits if you are able to sustain your activities

over time. Sustainability describes the extent to which an evidence-based intervention can

continue to be delivered, especially if external support or funding ends.

35

You will only be able to sustain effective implementation efforts if you keep evaluating and

adapting them to your setting and population. Therefore, after you evaluate your efforts, you

should reassess and continue sustaining the implementation.

What You Can Do: Sustain Your Intervention Program

Consider the following eight core domains to increase the intervention’s capacity for

sustainability.

36,37

These domains were developed by practitioners, scientists, and funders

from several public health areas.

You can use the Program Sustainability Assessment Tool to understand factors that influence

your intervention’s capacity for sustainability and develop an action plan to increase the

likelihood of sustainability. The tool helps identify your organization’s sustainability strengths

and weaknesses and can guide your sustainability planning.

Factors Influencing Sustainability

FUNDING STABILITY: Establishing a consistent financial base for your program

PROGRAM ADAPTATION: Changing your program to ensure its ongoing effectiveness

POLITICAL SUPPORT: Maintaining relationships with internal and external stakeholders who support your program

PROGRAM EVALUATION: Assessing your program to inform planning and document results

PARTNERSHIPS: Cultivating connections between your program and its stakeholders

STRATEGIC PLANNING: Using processes that guide your program’s direction, methods, and goals

ORGANIZATION CAPACITY: Having the internal support and resources needed to effectively manage your program and its activities

COMMUNICATIONS: Exchanging information about your program with stakeholders and the public
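To show how ratings across these eight domains might be summarized into strengths and weaknesses, here is a minimal Python sketch. It assumes each domain is rated with several items on a 1-to-7 scale and that a domain score is the average of its items. That scale, the function names, and the example ratings are assumptions made for illustration, not a description of how the Program Sustainability Assessment Tool itself computes results.

```python
from statistics import mean

# Domain names as listed in this guide.
DOMAINS = [
    "Funding Stability", "Program Adaptation", "Political Support",
    "Program Evaluation", "Partnerships", "Strategic Planning",
    "Organization Capacity", "Communications",
]


def domain_scores(responses: dict[str, list[int]]) -> dict[str, float]:
    """Average the item ratings for each domain (assumed 1-7 scale)."""
    scores = {}
    for domain, items in responses.items():
        if domain not in DOMAINS:
            raise ValueError(f"unknown domain: {domain}")
        if not items or any(not 1 <= r <= 7 for r in items):
            raise ValueError(f"ratings for {domain} must be non-empty and within 1-7")
        scores[domain] = mean(items)
    return scores


def weakest_domains(scores: dict[str, float], n: int = 2) -> list[str]:
    """Return the n lowest-scoring domains, lowest first."""
    return sorted(scores, key=scores.get)[:n]


if __name__ == "__main__":
    # Hypothetical ratings for three of the eight domains.
    ratings = {
        "Funding Stability": [2, 3, 2, 3, 2],
        "Partnerships": [6, 6, 7, 5, 6],
        "Communications": [4, 4, 4, 4, 4],
    }
    scores = domain_scores(ratings)
    print(scores)
    print("Focus areas for the action plan:", weakest_domains(scores))
```

Listing the lowest-scoring domains first is one simple way to decide where a sustainability action plan might start.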


Scaling Up

If the intervention has been successful in your setting, you or your organization might be

considering “scale-up.” Scaling up is the deliberate effort to increase the impact of successful

interventions so that they can benefit more people and foster sustainability.

38

You can scale up your implementation effort in three ways, as shown in Figure 9.

Scaling up requires a new examination of your partnerships and resources to decide if there is

evidence to support the adapted intervention.

VERTICAL: Adoption by different jurisdictions for policy-based, systematic, and structural change

HORIZONTAL: Expansion across the same system levels, such as departments, organizations, sectors

DEPTH: Addition of new components to an existing innovation

Figure 9. Potential directions for scaling up in population public health

39


What You Can Do: Proceed in Increments and Closely Monitor Your Progress

Multiple conditions and external institutions affect the process and prospects for scale-up.

The environment presents many opportunities and obstacles that must be identified and

addressed when deciding how you are going to scale up. You can use these steps and the

scale-up framework to systematically plan and manage the scale-up process:

40

1. Plan actions to increase scalability of your intervention.

2. Increase the user organizations’ capacity to scale up.

3. Assess the conditions of your environment (e.g., policies, bureaucracy, health and other

sectors, socioeconomic and cultural context, people’s needs and rights).

4. Increase the capacity of the resource team and implementers.

5. Make strategic choices appropriate for your scale-up.

Figure 10. The ExpandNet/WHO framework for scaling up (elements shown: the innovation; environment; resource team; user organizations; scale-up strategy. Strategic choice areas shown: types of scaling up; dissemination and advocacy; organizational choices; costs/resource mobilization; monitoring and evaluation)

Table 7. Considerations for Scale-Up

Strategic Choices, with Issues to Consider When Choosing Strategies

Types of scaling up

» Vertical scaling up – institutionalization through policy, political, legal, budgetary, or other health systems change
» Horizontal scaling up – expansion, replication

Dissemination and advocacy

» Personal – training, technical assistance, policy dialogue, cultivating champions and gatekeepers
» Impersonal – websites, publications, policy briefs, toolkits

Organizational process

» Scope of scaling up (the extent of geographic expansion and levels within the health system)
» Pace of scaling up (gradual or rapid)
» Number of agencies involved

Cost/resource mobilization

» Assessing costs
» Linking scale-up to macro-level funding mechanisms
» Ensuring adequate budgetary allocation

Monitoring and evaluation

» Special indicators to assess the process
» Outcome and impact of scaling-up
» Service statistics
» Local assessments


De-Implementing

De-implementation is the process of reducing

or stopping the use of a practice, intervention,

or program. There are many reasons why

a public health agency, organization, or

department may purposely choose to reduce (in

terms of frequency or intensity) the delivery of

a practice to a target population, or choose to

stop offering the practice to a target population

entirely.

Practices that may be appropriate for

de-implementation include those that are:

» Ineffective (e.g., evidence shows the

practice does not work)

» Contradicted (e.g., new and stronger or

more robust evidence shows the practice

doesn’t work)

» Mixed (e.g., some evidence shows that the

practice works but other evidence shows

that it doesn’t work)

» Untested (e.g., programs that have not yet

been evaluated in a research study)

Determining what practice to de-implement

and how quickly is influenced by many factors,

including how widespread the practice is in use,

what resources are allocated for implementing

the program that might otherwise be spent on

offering effective practices, and the needs of

the target population.

De-implementation also should include multiple

stakeholders, planning, and consideration

of multi-level factors that can influence the

de-implementation of a practice. In addition,

frameworks, models, and theories that can help

inform and guide the use of strategies facilitate

the de-implementation process. Frameworks

specifically focused on de-implementation,

and identification of strategies most effective

for facilitating the de-implementation process,

are of increasing interest among researchers,

practitioners, and policy makers.

What You Can Do: Follow These Steps for De-Implementation

1. Identify and prioritize practices

that may be appropriate for

de-implementation.

a. Is your organization offering practices

that are no longer needed by the

community?

b. Is there a more pressing or important

health issue that should be addressed

instead?

2. Gather information on potential

barriers to the de-implementation

process.

a. Will personnel or organizational

changes be needed if the practice is no

longer offered?

b. Will de-implementing the practice

reduce collaborative opportunities with

community partners?

3. Identify strategies that are

needed to overcome the

de-implementation barriers.

a. Will an alternative practice be

introduced to replace the one that

is being removed? What training is

available for the new practice?

b. What communication is needed to

educate the community on why a

practice is no longer being offered?

4. Implement and evaluate strategies

to support de-implementation.

a. Can you identify alternative practices

that could be used to meet the needs

of the community while maintaining

strong community linkages?

b. Can you allocate resources to another

important issue or public health practice?



Case Studies

See how cancer control practitioners use implementation science to

deliver effective interventions in their communities.

West Virginia Program to Increase Colorectal Cancer Screening

The West Virginia Program to Increase Colorectal Cancer Screening (WV PICCS) was funded

by the Centers for Disease Control and Prevention and directed through the West Virginia

University Cancer Institute. The program aimed to increase colorectal cancer screening rates

in persons aged 50‒75 by partnering with health care systems across West Virginia. The

intervention sought to change protocols within health care systems, such as primary care

practices, to increase referral and completion of colorectal cancer screenings.

Each year, WV PICCS partnered with a different cohort of primary care clinics to help increase

their colorectal cancer screening rates. The program prioritized partner clinics serving areas

with high rates of colorectal cancer mortality and late-stage diagnosis. To date, WV PICCS has

partnered with forty-four clinics around the state.

WV PICCS further partnered with health systems to implement at least two evidence-based

interventions and supportive activities shown to increase colorectal cancer screening. As

part of the WV PICCS project, every clinic undertook a provider assessment and feedback

intervention. Each clinic chose to deliver an additional intervention method they believed

would be the best “fit” for both their clinic and their patient population.

ASSESS

To help clinics identify and select the second intervention, each health system and WV PICCS

collaboratively assessed the clinic’s capacity, interests, and workflow, among other factors. As

a result, clinics could choose to implement enhanced client reminders, provider reminders, or

structural barriers reduction programs to increase the recommendation for and completion of

screening. The most frequently implemented project was the enhanced call reminder program

to encourage patients to complete and return fecal immunochemical tests to the clinic.

PREPARE

WV PICCS staff worked with the health care systems to help tailor the enhanced call reminder

intervention to best fit the clinics’ workflow and ensured the protocol was adapted to fit the

setting. For example, while several clinics opted to have nurses place the reminder calls, in

other clinics, lab technicians or care coordinators made the calls.


IMPLEMENT

WV PICCS worked with the clinics over a two-year, two-phase implementation period. WV

PICCS leveraged multiple implementation strategies, such as patient navigation and media

outreach, to enhance the intervention implementation and uptake.

During the project’s first year, WV PICCS assigned a staff member to each clinic to provide

extensive technical assistance. This staff member offered tailored technical support, held

monthly facilitation meetings, and helped monitor changes to

each clinic’s care delivery system.

In the second year, the technical assistance was reduced to once a month. This tapering

allowed the clinic and WV PICCS to assess the clinic’s capability to sustain the new

interventions over time.

EVALUATE

WV PICCS used the “plan, do, study, act” evaluation cycles to support their implementation

and evaluation efforts. In keeping with their model, during the second year of the initiative,

data collection began to assess clinical capacity to sustain the improved colorectal cancer

screening rate.

LESSONS LEARNED

The call reminders were originally proposed as up to three

calls followed by a letter. Knowing it is important that

interventions fit the clinical culture, WV PICCS adapted the

intervention to allow clinics to send reminders via letter first,

if it better suited their workflow. WV PICCS listened to the

clinics when they provided feedback and adjusted the protocol.

As partners, you need to listen.


Kukui Ahi (Light the Way): Patient Navigation

Racial and ethnic disparities affect rates of cancer screening. For example, Asian Americans are less

likely than non-Hispanic Whites to undergo timely cervical and colorectal screening, and Native

Hawaiians are less likely than non-Hispanic Whites to get a mammogram.

41

These disparities may

be due to a combination of health system, provider, and patient factors that decrease access to care

and lower patients’ capacity to advocate for their needs. Interventions that promote accessible

and coordinated care may have the potential to increase screening and reduce delays in time to

diagnosis and treatment after abnormal screenings.

The intervention “Kukui Ahi (Light the Way): Patient Navigation” used lay-patient navigators

from the local community. In coordination with health care providers, the lay-patient

navigators supported Medicare recipients through education, coordinating screenings, providing

transportation, assisting with paperwork, and finding ways to pay for care. They aimed to increase

screening rates for colorectal, cervical, breast, and prostate cancers among Asian and Pacific

Islander Medicare beneficiaries.

ASSESS

The idea of implementing a patient navigation intervention came from community partners of the

‘Imi Hale Native Hawaiian Cancer Network (‘Imi Hale). The ‘Imi Hale team consequently examined

current patient navigation programs, such as the patient navigation intervention at Harlem Hospital,

and looked for ways in which they could adapt it for the populations of the Hawaiian Islands.

PREPARE

To create a comprehensive training curriculum for the lay-patient navigators implementing the

program, ‘Imi Hale conducted a needs assessment that involved interviewing and collaborating

with public health practitioners, doctors, community health workers, cancer nurses, and other

stakeholders at the hospital. Additionally, they maximized opportunities to build relationships

between community outreach workers and hospital-based providers by inviting both to the training.

Given the diversity of the population that ‘Imi Hale served, there were three different training

modules created for the lay-patient navigators. Although there was variety in intervention

implementation, there were fourteen key navigator competencies that were consistently included

across all trainings.

IMPLEMENT

One of the reasons why ‘Imi Hale believes they were able to recruit and retain such dedicated

lay-patient navigators is because of their investment in building the navigators’ capacity through

ongoing training. The team sent their navigators to trainings that occurred in places like New York

City and Michigan so that their navigators could build their network and capacity. The team also

created a dynamic training atmosphere where they emphasized that the lay-patient navigators were

not expected to know everything necessary during the implementation process; rather, their role

was to be an active learner and find the resources needed to assist their patients.


EVALUATE

With many programs and interventions, an important factor for sustainability is the

availability of funding. Aside from collaborating with community health centers and their

grant writers, who help in attaining continual funding, the navigation curriculum was

converted into a community college course where many navigators received training. Creating

a standardized training was useful in the move to certify navigators and establish mechanisms

for reimbursement for navigation services.

42

Additionally, the team used a task list to ensure

fidelity of program delivery. This list included tasks required across the cancer care continuum

related to evaluation and quality assurance of intervention implementation.

42

LESSONS LEARNED

Creating meaningful partnerships for successful program

implementation and thinking ahead about sustainability can

seem like a daunting task. However, the one lesson that the

team came to understand is that when you are proactive in

sharing the vision with everyone on your team, unexpected

resources and people power will subsequently present

themselves. When your team shares that vision of program

implementation, the tasks become less daunting.

Share your vision with everyone on your team.


Tailored Communication for Cervical Cancer Risk

While overall rates of cervical cancer have decreased in the United States, racial and ethnic

minorities face a greater incidence of and death from the disease.

41

The most widely used

screening for cervical cancer is the Pap test. Women who receive an abnormal Pap test result

are referred for follow-up testing (colposcopy).

41

Low rates of follow-up after an abnormal

Pap test result may contribute to the higher incidence of cervical cancer among low-income,

minority women. Interventions that target barriers faced by low-income minority women are

essential to redress cervical cancer.

Tailored Communication for Cervical Cancer Risk is a telephone counseling intervention

developed by Fox Chase Cancer Center. The intervention targeted at-risk women who received

an abnormal Pap test result and were scheduled for follow-up testing. Two to four weeks

prior to their colposcopy appointment, interviewers called each woman and, using a scripted

questionnaire, identified barriers to follow-up and provided tailored counseling messages.

The messages addressed these barriers and encouraged women to attend an initial colposcopy

appointment and, six and twelve months later, repeat Pap test and colposcopy appointments.

ASSESS

Delivering this intervention over the telephone leveraged readily accessible resources.

PREPARE

In advance of the project, Fox Chase Cancer Center created a scripted questionnaire to ensure

that the intervention was delivered as planned.

Fox Chase established partnerships with health care providers—particularly those in

leadership positions. By working with nurse managers, they learned about the clinic workflow

and how best to manage nurses’ competing priorities during intervention implementation.

Additionally, their community engagement approach allowed them to identify different

populations’ perceived notions of the best times to call, how often to call, and the most

relevant and appropriate counseling messages to deliver.

IMPLEMENT

Many strategies supported the program’s successful implementation. For example, Fox Chase

engaged a new clinical team to conduct recruitment and deliver the intervention at an offsite

facility. This ensured that there was dedicated staff trained to deliver the intervention and

that support was available. Insights from community members and their review of the tailored

messages were also key in ensuring the intervention fit the target population.


EVALUATE

The evaluation found the intervention to be successful in increasing both the number of

women who attended an initial colposcopy appointment and longer-term medical follow-up.

41

Integrating the intervention into the standard care practice ensured its uptake and

sustainability. Hiring designated staff to deliver the intervention and engaging stakeholders

throughout the process further ensured program fidelity.

LESSONS LEARNED

Program director Suzanne Miller reflected that involving

research staff, clinic leadership, and community members

from the onset supported their success. “It will be exhausting

and time consuming, but that is what is going to set you up

for success.”

Have all stakeholders involved on day one.


LIVESTRONG® at the YMCA

Physical activity plays an essential role in enhancing the length and quality of life for cancer

survivors. Aside from cancer-specific outcomes, the benefits of exercise include increased

flexibility and physical functioning as well as improvements in patient-reported outcomes

such as fatigue.

The LIVESTRONG at the YMCA program was developed to improve the well-being of adult

cancer survivors following a cancer diagnosis.

43

The twelve-week physical activity program

included two ninety-minute personalized exercise sessions per week, delivered in a small,

supportive environment.

ASSESS

LIVESTRONG at the YMCA began as an evidence-informed physical activity program

that drew from studies showing that physical activity is safe for and beneficial to cancer

survivors.

43

Since then, LIVESTRONG at the YMCA has had wide-reaching impact and has been

shown to improve physical activity, fitness, and quality of life and to reduce cancer-related

fatigue in its participants.

44

PREPARE

Although LIVESTRONG at the YMCA has spread across 245 different Y associations, the program

has maintained its centralized vision and program goal. Core components of the program (a

functional assessment at the beginning and end of the program and the delivery of the program

by a certified YMCA instructor) are consistently implemented across the local Ys.

YMCA-certified instructors are usually existing staff, external individuals, past participants,

or volunteers who meet the certification requirements. The flexibility of who delivered the

program as well as the required training has allowed for the adoption of this program in 700

communities across the nation.

One of the challenges that LIVESTRONG at the YMCA faced is that its onsite mode of delivery

may make access to the program difficult for some YMCAs. YMCAs that did not have the

capacity to host the program due to a lack of trained staff or available exercise equipment were

able to sign on to an agreement with larger YMCAs to help build their readiness to implement.

Program directors at the Y attributed their continued success to meaningful partnerships they

formed and nurtured with LIVESTRONG. LIVESTRONG provides not only financial support but

also additional services such as patient navigation and resource books. The Y integrated these

services into their usual operations.


IMPLEMENT

The national partnership between LIVESTRONG and YMCA is enhanced by strong local

partnerships. One key implementation strategy was a six-month capacity-building training

required prior to program delivery. A key aspect of this training focused on helping local Ys

collaborate with their community’s health system. These local-level partnerships engaged the Y

with cancer survivors, health care providers, and patients, expanding the reach of the program.

EVALUATE

The goal of LIVESTRONG at the Y is to reach 100,000 cancer survivors by the year 2022.

Participating YMCAs regularly collect data to track their progress and program delivery. The

YMCA will soon launch a centralized reporting system to help with consistent evaluations

across the Ys.

LESSONS LEARNED

When asked to reflect on the factors that

contribute to the spread and uptake of

LIVESTRONG at the YMCA, program directors

and practitioners credited the time spent in

preparation and in giving organizations the

time to build the program. Investing in the

time necessary to identify local staff and

partners, develop a partnership pathway, and

sustain meaningful relationships was central

to their success.

You cannot undervalue the laying of groundwork and giving organizations the time to build.


Implementation Resources for Practitioners

Here are some resources to help further your implementation efforts.

CANCER PREVENTION AND CONTROL RESEARCH NETWORK – PUTTING PUBLIC HEALTH EVIDENCE IN ACTION TRAINING

An interactive training curriculum to teach community program planners and health educators

to use evidence-based approaches, including how to adapt programs.

http://cpcrn.org/pub/evidence-in-action/#

CANCER CONTROL P.L.A.N.E.T. (PLAN, LINK, ACT, NETWORK WITH EVIDENCE-BASED TOOLS)

A portal that provides access to data and resources that can help planners, program staff, and

researchers design, implement, and evaluate evidence-based cancer control programs.

https://cancercontrolplanet.cancer.gov/planet

THE COMMUNITY GUIDE

A searchable collection of evidence-based findings of the Community Preventive Services Task

Force. It is a resource to help select interventions to improve health and prevent disease in a

state, community, community organization, business, health care organization, or school.

www.thecommunityguide.org

CONSOLIDATED FRAMEWORK FOR IMPLEMENTATION RESEARCH – TECHNICAL ASSISTANCE WEBSITE

A site created for individuals considering the use of this framework to evaluate an

implementation or design an implementation study.

http://cfirguide.org

DISSEMINATION AND IMPLEMENTATION MODELS IN HEALTH RESEARCH AND PRACTICE

An interactive database to help researchers and practitioners select, adapt, and integrate the

dissemination and implementation model that best fits their research question or practice problem.

http://dissemination-implementation.org

EXPANDNET/WHO SCALING-UP GUIDE

Tools that provide a more comprehensive examination of scaling-up. Includes guides,

worksheets, briefs, and more.

http://expandnet.net/tools.htm

HEALTHY PEOPLE 2020 EVIDENCE-BASED RESOURCES

A searchable database with interventions and resources to improve the health of your community.

www.healthypeople.gov/2020/tools-resources/Evidence-Based-Resources


LIVESTRONG AT THE YMCA

A physical activity program developed by the YMCA and the LIVESTRONG Foundation.

The program assists those who are living with, through, or beyond cancer to strengthen

their spirit, mind, and body.

www.ymca.net/livestrong-at-the-ymca

NIH EVIDENCE-BASED PRACTICE AND PROGRAMS

A collection of several databases and other resources with information on evidence-based

disease prevention services, programs, and practices with the potential to impact public health.

https://prevention.nih.gov/resources-for-researchers/dissemination-and-implementation-resources/evidence-based-programs-practices#topic-13

PARTNERSHIPS ENGAGING STAKEHOLDERS TOOLKIT

A set of resources to help program planners who are working in partnerships to improve policy.

www.pmc.gov.au/sites/default/files/files/pmc/implementation-toolkit-3-engaging-stakeholders.pdf

PEW-MACARTHUR RESULTS FIRST INITIATIVE

A resource that brings together information on the effectiveness of social policy programs

from nine national clearinghouses.

https://www.pewtrusts.org/en/projects/pew-macarthur-results-first-initiative

PROGRAM SUSTAINABILITY ASSESSMENT TOOL (PSAT)

A 40-question self-assessment that program staff and stakeholders can take to evaluate the

sustainability capacity of a program. Use the results to help with sustainability planning.

https://sustaintool.org

REACH, EFFECTIVENESS, ADOPTION, IMPLEMENTATION, AND MAINTENANCE (RE-AIM)

FRAMEWORK

Resources and tools for those wanting to apply the RE-AIM framework. Includes planning tools,

calculation tools, measures, checklists, visual displays, figures, an online RE-AIM module, and more.

http://www.re-aim.org

A REFINED COMPILATION OF IMPLEMENTATION STRATEGIES: (ERIC) PROJECT

A list of strategies you can use to implement your program.

https://implementation-science.biomedcentral.com/articles/10.1186/s13012-015-0209-1

RESEARCH-TESTED INTERVENTION PROGRAMS

A searchable database with evidence-based cancer control interventions and programs

specifically for program planners and public health practitioners.

https://rtips.cancer.gov/rtips/index.do

UNITED NATIONS OFFICE ON DRUGS AND CRIME (UNODC) EVALUABILITY ASSESSMENTS

A template to examine whether your program can be evaluated in a reliable and credible way.

www.unodc.org/documents/evaluation/Guidelines/Evaluability_Assessment_Template.pdf

Glossary of Terms

ADAPTATION – The degree to which an evidence-based intervention is changed to suit the

needs of the setting or the target population.

8,13

ADOPTION – A decision to make full use of an innovation, intervention, or program as the

best course of action available. Also defined as the decision of an organization or community

to commit to and initiate an evidence-based intervention.

13

COMMUNITY-BASED PARTICIPATORY RESEARCH (CBPR) – A collaborative approach to

research that equally involves all partners in the research process and recognizes the unique

strengths that each brings. CBPR begins with a research topic of importance to the community

and aims to combine knowledge with action to drive social change to improve health

outcomes and eliminate health disparities.

45

CONSTRUCTS – Concepts developed or adopted for use in a theory. The key concepts of a

given theory are its constructs.

46

DE-IMPLEMENTATION – Reducing or stopping the use of a guideline, practice, intervention, or

policy in health care or public health settings.

47

DISSEMINATION SCIENCE – The study of targeted distribution of information and intervention

materials to a specific public health or clinical practice audience. The intent is to understand

how best to spread and sustain knowledge and the associated evidence-based interventions.

48

EVIDENCE-BASED INTERVENTION – Health-focused intervention, practice, program, or

guideline with evidence demonstrating the ability of the intervention to change a health-

related behavior or outcome.

4

FIDELITY – Degree to which an intervention or program is implemented as intended by the

developers and as prescribed in the original protocol.

8,9

IMPLEMENTATION OUTCOMES – The effects of deliberate and purposive actions to implement

new treatments, practices, and services. Implementation outcomes may include acceptability,

feasibility, adoption, penetration, appropriateness, cost, fidelity, and sustainability.

26

IMPLEMENTATION SCIENCE – The study of methods to promote the adoption and integration

of evidence-based practices, interventions, and policies into routine health care and public

health settings to improve the impact on population health.

IMPLEMENTATION STRATEGIES – Methods or techniques to enhance the adoption,

implementation, and sustainability of a program or practice.

21,49

KNOWLEDGE SYNTHESIS – A process for obtaining and summarizing scientifically derived

information, including evidence of effectiveness (risk and protective factors, core components,

and key features, etc.).

18

KNOWLEDGE TRANSLATION – The process of converting scientific and technically complex

research into everyday language and applicable, actionable concepts in the practice setting.

18

REACH – The absolute number, proportion, and representativeness of individuals who

participate in a given initiative or receive a specific intervention.

26

SCALE-UP – Deliberate efforts to increase the spread and use of innovations successfully

tested in pilot or experimental projects to benefit more people and to foster policy and

program development.

38

SUSTAINABILITY – The continued use of program components and activities to achieve

desirable outcomes.

35

SERVICE EFFECTIVENESS OUTCOMES – Intervention results examined at the system level,

including efficiency, safety, effectiveness, equity, patient-centeredness, and timeliness.

26,50

References

1. About Implementation Science (IS). National Cancer Institute: Division of Cancer Control &

Population Sciences. https://cancercontrol.cancer.gov/IS/about.html. March 8, 2018.

2. Cabinet Implementation Unit Toolkit: Engaging Stakeholders. Australian Government.

https://www.pmc.gov.au/sites/default/files/files/pmc/implementation-toolkit-3-engaging-stakeholders.pdf. June 2013.

3. Identifying and managing internal and external stakeholder interests. HealthKnowledge.

https://www.healthknowledge.org.uk/public-health-textbook/organisation-management/5b-understanding-ofs/managing-internal-external-stakeholders.

4. Chambers DA, Vinson CA, Norton WE. Advancing the Science of Implementation Across the

Cancer Continuum. New York, NY: Oxford University Press; 2019.

5. Brownson RC, Fielding JE, Maylahn CM. Evidence-based public health: a fundamental

concept for public health practice. Annu Rev Public Health. 2009;30:175-201.

doi:10.1146/annurev.publhealth.031308.100134.

6. Session 1: Defining Evidence. Putting Public Health Evidence in Action Training Workshop.

Cancer Prevention and Control Research Network (CPCRN).

http://cpcrn.org/pub/evidence-in-action/. November 2017.

7. Satterfield JM, Spring B, Brownson RC, et al. Toward a transdisciplinary model of evidence-based

practice. Milbank Q. 2009;87(2):368-390. doi:10.1111/j.1468-0009.2009.00561.x.

8. Rabin BA, Brownson RC, Haire-Joshu D, Kreuter MW, Weaver NL. A glossary for

dissemination and implementation research in health. J Public Health Manag Pract.