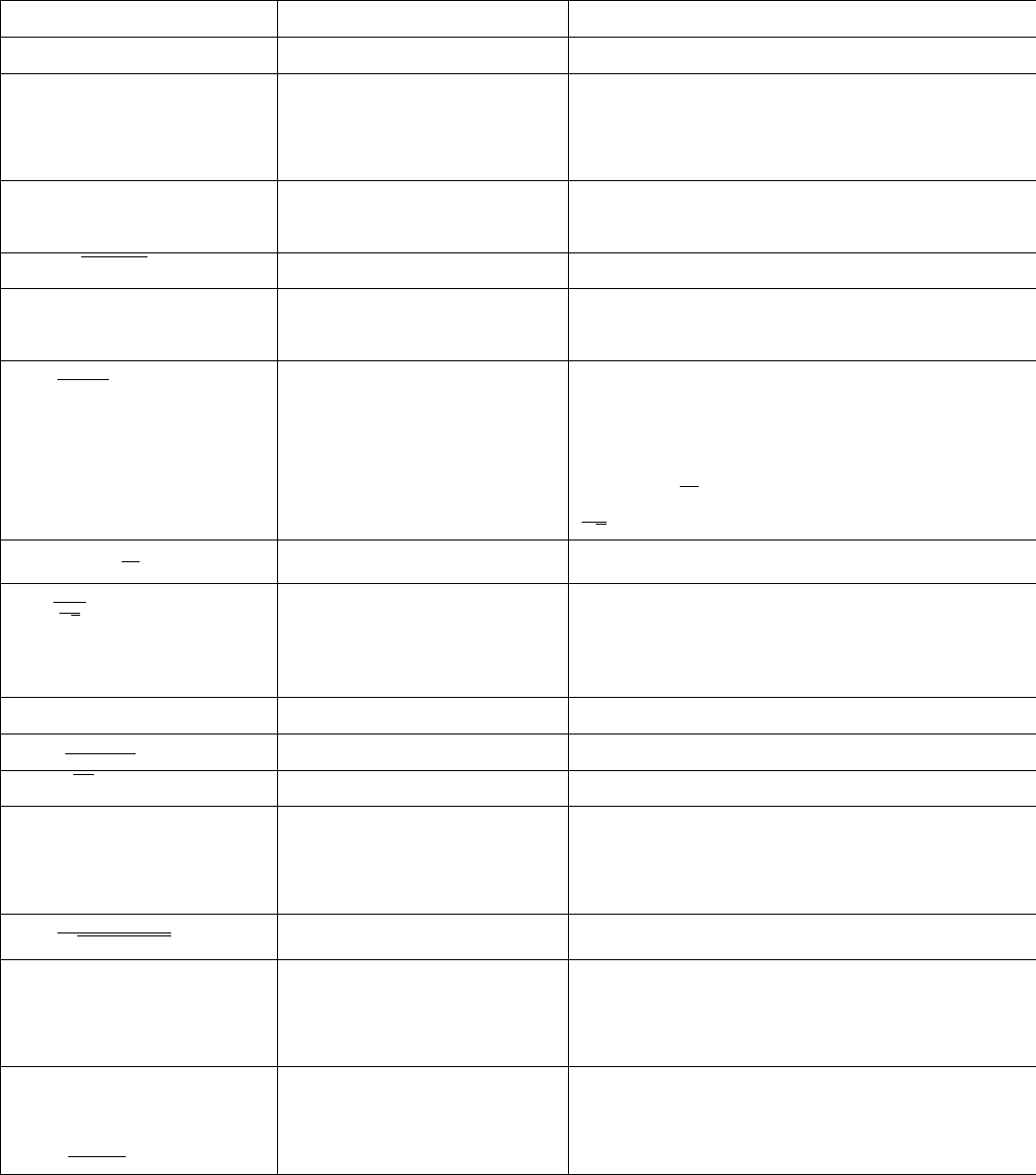

Basic Statistical Notation

Notation Meaning Remark

y random variable it takes different values with probabilities

µ = E(y) = Σ

j

y

j

f(y

j

) population mean the center of a distribution

weighted average of possible values (y

j

)

weight is probability (f(y

j

))

σ

2

= var(y) = E(y −µ)

2

population variance the dispersion of the distribution

distribution is wide if var(y) is big

σ =

√

var(y) standard deviation (sd) another measure of dispersion

{y

1

, y

2

, . . . , y

n

} sample we get a random or i.i.d sample if

E(y

i

) = µ, var(y

i

) = σ

2

, cov(y

i

, y

j

) = 0, ∀i, j

¯y =

Σ

n

i=1

y

i

n

sample mean estimate for population mean

it is a random variable

E(¯y) = µ if we use random sample

var(¯y) =

σ

2

n

if we use random sample

σ

√

n

is standard error (se) of ¯y

¯y ∼ N

(

µ,

σ

2

n

)

central limit theorem it holds when n is big

t =

¯y−µ

σ

√

n

t value standardized ¯y

t ∼ N(0, 1) when n is big

big t value rejects a hypothesis

2P r(T > |t|) p-value for two-tailed test small p value rejects a hypothesis

s

2

=

Σ(y

i

−¯y)

2

n−1

sample variance estimate for population variance

s =

√

s

2

sample standard deviation

cov(x, y) population covariance measure of association

= E((x − µ

x

)(y − µ

y

)) x and y are positively correlated if cov > 0

x and y are negatively correlated if cov < 0

ρ =

cov(x,y)

√

var(x)var(y)

correlation (coefficient) −1 ≤ ρ ≤ 1

y = β

0

+ β

1

x + u simple regression y is dependent variable

x is independent variable (regressor)

u is error term (other factors)

E(y|x) = β

0

+ β

1

x PRF we assume E(u|x) = 0 which implies

β

0

= E(y|x = 0) intercept (constant) term cov(x, u) = 0 (exogeneity, ceteris paribus)

β

1

=

dE(y|x)

dx

slope ∆E(y|x) = β

1

when ∆x = 1

1

Some Useful Intuitions

Let c denotes a constant number, and x and y denote two random variables

1. The expectation or mean value (E or µ) means “average”. It measures the central

tendency of the distribution of a random variable.

2. By definition, variance is the average squared deviation:

var(x) = E[(x − µ

x

)

2

].

Variance is big when x varies a lot. Variance cannot be negative.

3. Since a constant has zero variation, we have var(c) = 0

4. Since variance is average squared something, we have var(cx) = c

2

var(x)

5. We have (a + b)

2

= a

2

+ b

2

+ 2ab. Similarly we can show

var(x + y) = var(x) + var(y) + 2cov(x, y).

Do not forget the cross product or covariance.

6. Covariance measures (linear) co-movement. Since c stays constant no matter how x

moves, we have cov(x, c) = 0.

7. Formally, covariance is the average pro duct of deviation of x from its mean and devi-

ation of y from its mean:

cov(x, y) = E[(x − µ

x

)(y − µ

y

)].

The covariance is positive if both x and y move up beyond their mean values, or both

move below their mean values. The covariance is negative if one moves up, while the

other moves down. In short, covariance is positive if two variables move in the same

direction, while negative when they move in opposite direction.

8. Two variables are uncorrelated if covariance is zero.

9. For example, from eco201, we know price and quantity demanded are negatively cor-

related, while price and quantity supplied are positively correlated.

2

10. The link between variance and covariance is that cov(x, x) = var(x)

11. Variance and covariance are not unit-free, i.e., they can be manipulated by changing

the units. For example, we have var(cx) = c

2

var(x) and cov(cx, y) = ccov(x, y)

12. By contrast, the correlation coefficient (ρ or corr) cannot be manipulated since it stays

the same after we multiply x by c :

ρ

cx,y

=

cov(cx, y)

√

var(cx)

√

var(y)

=

ccov(x, y)

√

c

2

var(x)

√

var(y)

= ρ

x,y

13. In a similar fashion we can show the OLS estimator

ˆ

β =

S

xy

S

2

x

is not unit-free, so can be

manipulated, while the t-value is unit-free and cannot be manipulated. That is why

we want to pay more attention to the correlation coefficient and t-value.

14. We have −

√

a

2

√

b

2

≤ ab ≤

√

a

2

√

b

2

, Similarly we can show or −

√

var(x)

√

var(y) ≤

cov(x, y) ≤

√

var(x)

√

var(y), or by using the absolute value |cov(x, y)| ≤

√

var(x)

√

var(y).

This implies that

−1 ≤ ρ

x,y

≤ 1

So the correlation coefficient is unit-free, moreover, it is also bounded between minus

one and one.

15. The equality holds (ρ = 1 or −1) only when x and y have perfect linear relationship

y = a + bx. In general, the relationship is not perfectly linear so we need to add the

error term: y = a + bx + u, then we have −1 < ρ

x,y

< 1. In short, the correlation

coefficient measures the degree to which two variables are linearly related.

16. The sample mean is in the middle of sample in the sense that positive deviation cancels

out negative deviation. As a result,

∑

(x

i

− ¯x) = 0

17. Treat ¯x as constant (since it has no subscript i) when it appears in the sigma notation

(summation). For instance,

∑

¯x = n¯x;

∑

¯xx

i

= ¯x

∑

x

i

3